The Dark Side of Organizations and a Method to Reveal It

David A. Bella

Oregon State University, USA

Jonathan B. King

Oregon State University, USA

David Kailin

Oregon State University, USA

Introduction

The ability to see the larger context is precisely what we need to liberate ourselves. (Milgram, 1992: xxxii)

Few who have read Stanley Milgram's book, Obedience to Authority (1974), or have seen videos of these “shocking” experiments can forget them. In our view, Milgram's experiments offer important lessons about contexts, human behaviors, and the role of contexts in setting boundary conditions around such behaviors. This article takes these lessons seriously. But first, a succinct summary of the experiments.

A “teacher” is instructed by the “scientist-in-charge” to administer an electric shock to a “student” every time he gives a wrong answer—which is most of the time. The teacher is given a list of questions in advance. The electric shocks range from 15 to 435 volts and are visibly displayed on a panel facing the teacher: Slight 15+ … Intense 255+ … Danger 375+ … XXX 435. The student—a superb actor who is not actually shocked—starts to grunt at 75 volts. He follows a standard script.

At 120 volts he complains verbally; at 150 he demands to be released from the experiment. His protests continue as the shocks escalate, growing increasingly vehement and emotional. At 285 volts his response can only be described as an agonized scream. (Milgram, 1974: 4)

And that's with 150 volts yet to go! At the high end, the student is dead silent. What happens if the teacher (repeatedly) objects? The scientist is only allowed to “prompt” her or him with such comments as, “Please continue, please go on,” “The experiment requires that you continue,” “You have no other choice, you must continue.” No threats, no demeaning remarks about the student; just calmly stated reasons why the teacher should continue.

So, at what point would you or I stop? The bad news is that over 60 percent of us go all the way even when we can hear the student screaming. The really bad news is the disparity between our actual behaviors and the predictions of “psychiatrists, graduate students and faculty in the behavioral sciences, college sophomores, and middle-class adults.”

They predict that virtually all subjects will refuse to obey the experimenter; only a pathological fringe, not exceeding one or two percent, was expected to proceed to the end of the shockboard. The psychiatrists… predicted that most subjects would not go beyond the 10th shock level (150 volts, when the victim makes his first explicit demand to be freed); about 4 percent would reach the 20th shock level, and about one subject in a thousand would administer the highest shock on the board. (Milgram, 1974: 31)

Why the stunning disparity? What are we overlooking? For starters, how did Milgram interpret the significance of such unexpected findings?

I must conclude that Arendt's conception of the banality of evil comes closer to the truth than one might dare imagine… This is, perhaps, the most fundamental lesson of our study: ordinary people, simply doing their jobs, and without any particular hostility on their part, can become agents in a terrible destructive process… Men do become angry; they do act hatefully and explode in rage against others. But not here. Something far more dangerous is revealed: the capacity for man to abandon his humanity—indeed, the inevitability that he does so—as he merges his unique personality into larger institutional structures. (Milgram, 1974: 6, 188)

“Larger institutional structures”? What are such things? And why are we apparently blind to the emergence of their dark side?

We propose that the first general lesson to be drawn from Milgram's experiments is that contexts are powerful determinants of human behavior. In his experiments, Milgram essentially constructed a context, and subjects found it extremely difficult to act out of context, that is, to refuse to continue the testing. A second general lesson is that the power of context to shape human behavior has been vastly underestimated, if not overlooked entirely: when the experiments were described to people, including experts, virtually all failed to foresee anything remotely close to the compliance that actually occurred. A third lesson, which we shall literally illustrate, is that Milgram's experimental results extend to and pervade human existence, and that the contexts behind such compliance are typically neither the result of deliberate design nor otherwise intended. Instead, they emerge.

This article presents a method to see past the business that preoccupies us to expose the character of contexts that promote compliance no less disturbing than the compliance of Milgram's subjects. While disarmingly simple, this method is far from simplistic, for it allows us to illustrate the patterns that lie behind the countless tasks of ordinary people who are simply doing their jobs, getting by, and struggling to succeed. From such patterns, great harm can emerge. But within the context of such patterns, one finds individuals who are hard-working, competent, and well-adjusted. The key to understanding such claims is to take emergence very seriously.

Put bluntly, outcomes that we consider harmful, distorting, and even evil can and too often do emerge from behaviors that are seen as competent, normal, and even commendable. These emergent outcomes cannot be reduced to the intentions of individuals; more disturbingly, dark outcomes can emerge from interactions among well-intended, hardworking, competent individuals. Such phenomena do not require the setting of Milgram's experiment, with the “authority” of a laboratory complete with a “scientist” dressed in a white lab coat. Quite the contrary: they are an everyday feature of our lives. Therefore, rather than focusing on the actions of irresponsible individuals, we must, most importantly, attend to the contexts within which normal, well-adjusted people find good reasons for behaving as they do.

SKETCHING CONTEXT

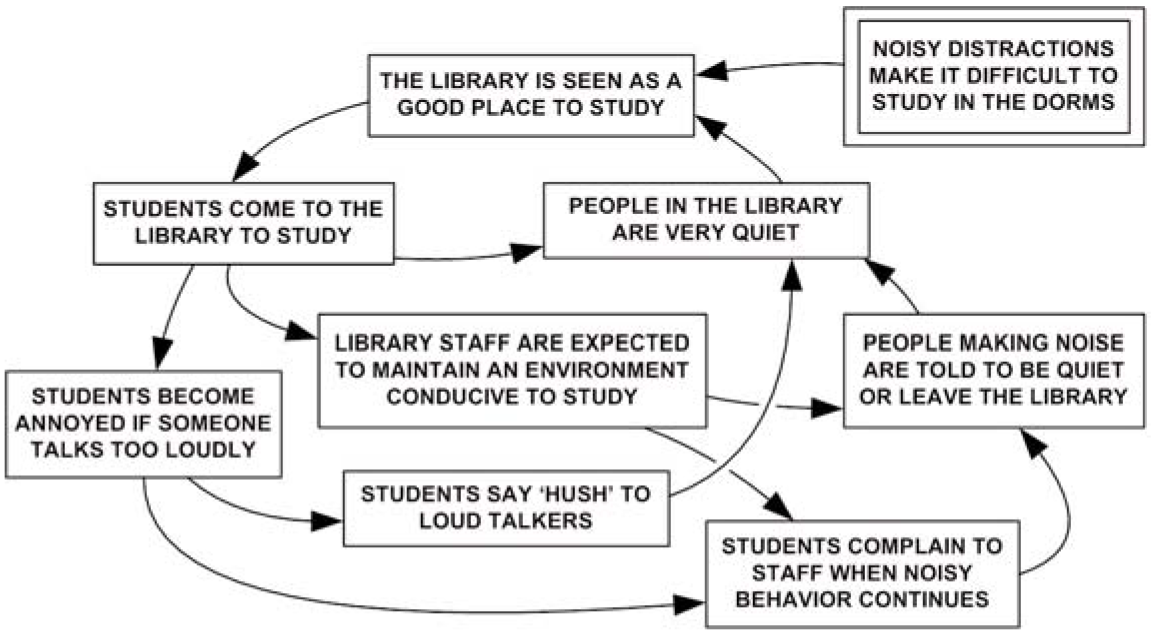

Consider the normal behavior of well-adjusted students in a university library. At a football game, these students yell and cheer. At the library they do not. Why? The behavioral contexts are different and—this is a key claim—contexts shape behavior. To understand this claim, consider a sketch of the library context given in Figure 1. To read this sketch, begin with any statement of behavior. Read forward or backward along an arrow to the next statement. Say “therefore” when you move forward along an arrow and “because” when you move backward. Wander through the entire sketch, moving forward and backward along the many loops, until you grasp the character of the whole. Please note: If you do not work through the sketches in this manner, you will likely misperceive the fundamental claims of this article.

Figure 1 A sketch of the university library context. Read forward (say “therefore”) or backward (say “because”).
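The reading rule described above can be illustrated with a minimal Python sketch. The statements below are hypothetical placeholders chosen for illustration; they are not the actual boxes of Figure 1, and the function names are our own assumptions. Each arrow is stored as a (reason, consequence) pair.

```python
# Hypothetical statements for illustration only; these are NOT the boxes of Figure 1.
# Each arrow is a (reason, consequence) pair.
ARROWS = [
    ("the library is quiet", "students come to the library to study"),
    ("students come to the library to study", "students expect quiet from one another"),
    ("students expect quiet from one another", "students keep their voices down"),
    ("students keep their voices down", "the library is quiet"),
]


def read_forward(arrows, statement):
    """Move forward along each outgoing arrow, saying 'therefore'."""
    return [f"{src}, therefore {dst}" for src, dst in arrows if src == statement]


def read_backward(arrows, statement):
    """Move backward along each incoming arrow, saying 'because'."""
    return [f"{dst} because {src}" for src, dst in arrows if dst == statement]


if __name__ == "__main__":
    for sentence in read_forward(ARROWS, "the library is quiet"):
        print(sentence)
    for sentence in read_backward(ARROWS, "the library is quiet"):
        print(sentence)
```

Starting from any statement and alternating the two functions corresponds to wandering through the sketch in either direction.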

Yes, there are rules for proper behavior within the library, but very few read them. Instead, we find that amid the busy activities of students, general behaviors tend to settle into mutually reinforcing patterns. These emergent patterns constitute the context. Figure 1 therefore serves to explain not the specific behaviors of particular students, but rather the context that sustains normal behaviors as many students come and go.

Table 1 outlines the general method of sketching applied to Figure 1. Column A describes how human behaviors, in general, respond to any given context. Column B describes a disciplined approach to sketching that expresses the general behaviors given in Column A. Read these two parallel columns and note their relationships. Together they show that the way we behave within a context (Column A) can be sketched by the method given in Column B.

This method of disciplined sketching exposes behavioral patterns that are typically taken for granted. Such patterns, we claim, constitute behavioral contexts. Figure 1 is an example. Within this context, students find good reasons not to yell and cheer. This is what contexts do: They provide reasons for some kinds of behaviors and not others.

By using information relevant to the context of interest, this method can uncover the character of different contexts. Table 1 provides a discipline to such an inquiry. First, we are led to look for persistent behaviors and to express them in general terms. Second, we are forced to seek reasons—not “causes” but “reasons”—for such behaviors, reasons that make sense from the perspectives of those acting out the behaviors. Having done this, we make a sketch under the guidance of Table 1. Figure 1 was sketched (after many revisions) in this manner. Notice that, with the exception of the “given” (double-lined box), all the behaviors must meet two commonsense guidelines:

- Behaviors that tend to persist (keep coming up) do so because they have reasons that make sense to those acting out the behaviors.

- Behaviors that tend to persist have consequences that tend to persist.

| A: Within a given context | B: To develop a sketch of a given context |
|---|---|
| Some behaviors and conditions tend to persist and reoccur | Place simple descriptive statements of behaviors (conditions) in boxes; statements should make sense to those involved |
| Persistent and reoccurring behaviors (conditions) are supported by reasons that make sense to those involved | Each boxed statement should have at least one incoming arrow from a boxed statement that provides a reason that makes sense to those involved* |
| Persistent and reoccurring behaviors (conditions) have consequences that are also persistent and reoccurring | Each boxed statement should have at least one outgoing arrow pointing to a boxed statement that is a consequence |

*Occasionally a given statement can be employed without an incoming arrow, indicating that the reasons lie beyond the scope of the sketch

Table 1 General observations of human behaviors (Column A) and related guidelines for sketching (Column B)

The first guideline is met when each behavior statement (except the “given”) has at least one incoming arrow (a reason). The second guideline is met when each behavior has at least one outgoing arrow (a consequence). The patterns that emerge from these guidelines take the form of loops. Our method of sketching serves to uncover such patterns, exposing the fundamental character of a context that is often hidden in countless distracting details.
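The two guidelines can be read as a simple check on a directed graph. The following is a minimal sketch under stated assumptions: the function name `check_sketch`, the data layout, and the example statements are hypothetical and are not taken from Figure 1 or Table 1. The check only verifies that arrows are present; whether a reason actually makes sense to those involved remains a matter of judgment.

```python
def check_sketch(arrows, givens=()):
    """Return guideline violations for a sketch stored as (reason, consequence) pairs.

    Guideline 1: every statement except a "given" needs an incoming arrow (a reason).
    Guideline 2: every statement needs an outgoing arrow (a consequence).
    """
    statements = {s for pair in arrows for s in pair} | set(givens)
    has_incoming = {dst for _, dst in arrows}
    has_outgoing = {src for src, _ in arrows}
    problems = []
    for s in sorted(statements):
        if s not in has_incoming and s not in givens:
            problems.append(f"no reason (incoming arrow): {s}")
        if s not in has_outgoing:
            problems.append(f"no consequence (outgoing arrow): {s}")
    return problems


# Hypothetical example statements, for illustration only.
GIVEN = "the library exists for study"
SKETCH = [
    (GIVEN, "students come to study"),
    ("students come to study", "students keep quiet"),
    ("students keep quiet", "the library stays quiet"),
    ("the library stays quiet", "students come to study"),
]

if __name__ == "__main__":
    print(check_sketch(SKETCH, givens=[GIVEN]))
    # A statement with a reason but no consequence violates the second guideline.
    broken = SKETCH + [("students keep quiet", "librarians rarely intervene")]
    print(check_sketch(broken, givens=[GIVEN]))
```

A sketch that passes both checks has no dead ends, which is why the patterns that satisfy the guidelines close into loops.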

Notice some features of this simple sketch. The “elements” or “components” of the system, the boxed statements, do not refer to “agents” or to groups of people. Neither do these boxes represent “storage tanks” as in stock-and-flow models. They are not “control volumes” as often employed in the derivation of differential equations. Instead, the boxes describe behaviors or behavioral conditions. In turn, the arrows do not represent transfers (inputs and outputs). Instead, when read backward an arrow gives a reason; when read forward it gives a consequence. Notice also that the figure reads in natural or ordinary language. This allows readers quickly to grasp the pattern as a whole without struggling with unfamiliar notations, jargon, or symbols. Such sketches involve only a few statements—usually fewer than 14—so that the reader is drawn not to details but to the pattern as a whole. Sketches gain validity when people who have been involved within the context recognize it within the sketch. Finally, notice the form of the pattern: multiple loops of mutually reinforcing behaviors.

In sum, we claim that emergent outcomes in human affairs appear in such forms and that such forms become apparent through the application of this method. We will now show how this sketching method serves to expose a whole class of problems that are commonly overlooked and misperceived.

SIMPLE AND COMPLEX PROBLEMS

Imagine that a student does yell and cheer in the library; that is, that he or she acts out of context. This would constitute a problem—a condition that demands attention. “But,” you might respond, “we don't need such sketches to notice, let alone understand, this kind of problem.” We agree! The out-of-context (improper, maladjusted) behavior clearly stands out without the need of a method. So, when is this method of exposing context important? Why bother sketching loops if we don't need to? The answer becomes apparent when we recognize the difference between simple and complex human problems.

A problem arising from out-of-context behavior—a student shouting in the library—is a simple problem. Simple problems can be reduced to the improper behavior of the offending individuals. Thus, the problem lies in the part, not the whole. For complex problems, however, the condition that demands attention is the context as a whole. Unlike a maladjusted student yelling in the library, a complex problem arises from the well-adjusted behaviors of people acting within a context. Thus, the context itself demands our attention. However, unlike a shouting student, the context does not stand out in general, let alone as something abnormal in particular. Quite the opposite! The context defines the norm and is usually taken for granted.

If all human problems were simple problems, then disciplined sketching would be of little use. But if complex problems are both common and significant, then the patterns of normal and well-adjusted behaviors should concern us. Such patterns constitute the contexts that normal and well-adjusted people take for granted. Context defines normal behaviors. There are few things that can hide and sustain a problem as well as normalcy. We will now apply this method to a complex problem that is serious, widespread, and sustained by the normal behaviors of well-adjusted people.

THE SYSTEMIC DISTORTION OF INFORMATION

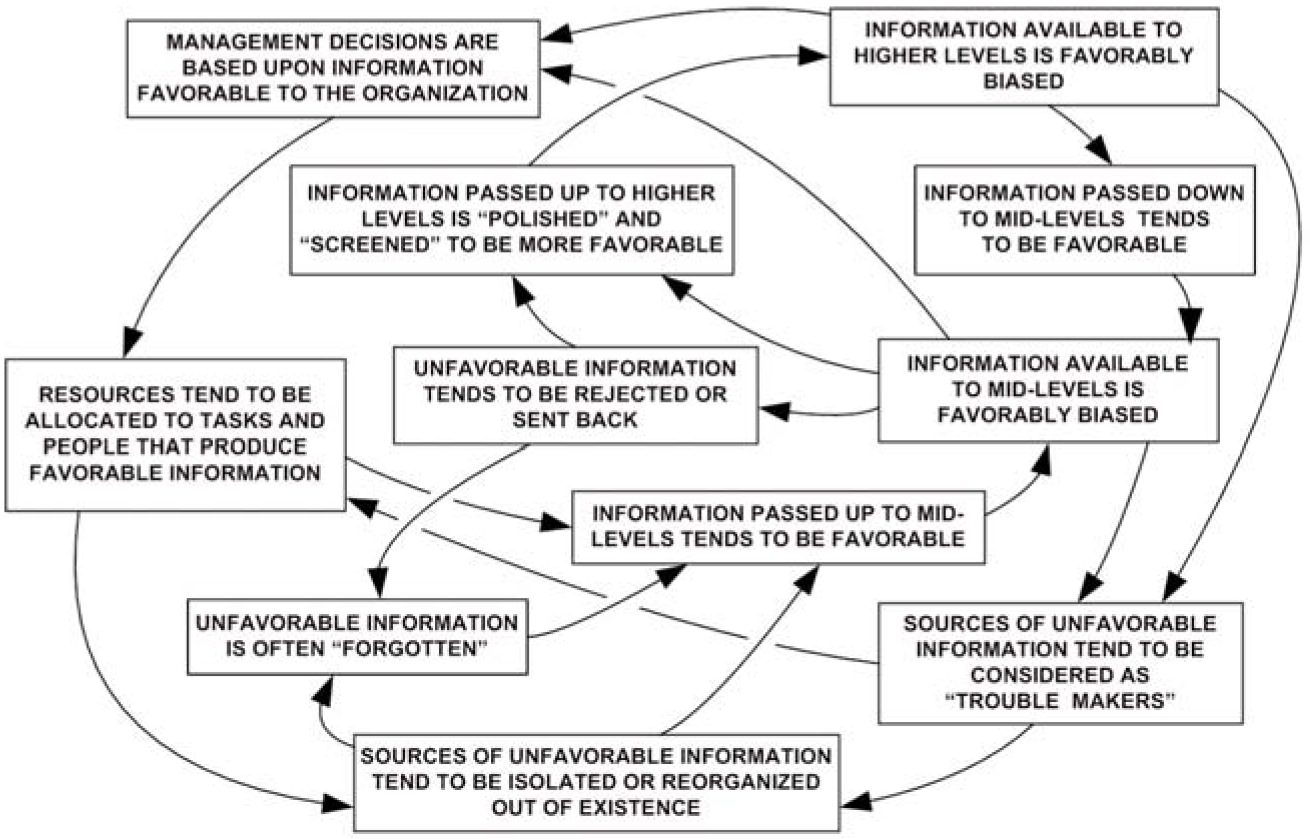

Clearly, information can be distorted through the willful intent of individuals. Without denying such willful distortions, we claim that information distortion can also emerge as a complex problem that cannot be reduced to the intentions of individuals. Figure 2 illustrates such systemic distortions. This sketch was selected because it has been peer reviewed by practitioners from a wide range of disciplines and appears to describe a pervasive, complex problem (Bella, 1987, 1996).

Wander through the entire sketch, moving forward (“therefore”) and backward (“because”) along the many different loops, until you comprehend the whole picture. You can sense how information favored by “the system” serves to support, sustain, promote, and propagate the system. Such information is more readily sought, acknowledged, developed, and distributed, while unfavorable information has more difficulty coming up, going anywhere, or even surviving.

Such contexts and the information they sustain shape the premises and perceptions of those involved, instilling within them what are taken for granted as proper and acceptable behaviors. Those who raise troubling matters, questions that expose distortions, are out of context. As with simple problems, they become the problem: the “trouble-makers.” They may face personal criticism, being charged with improper, even “unethical,” behavior. In the private sector, the possibility of legal action (“You'll hear from our lawyer”) can be very threatening. However, the problem sketched herein is not a simple problem; the context itself is the problem.

Now conduct the following exercise. Imagine that you have reviewed a recent report describing the consequences of an organization's activities (environmental impacts, as an example). You are upset to find that information concerning adverse consequences was omitted. You question the individuals involved. Your questions and their answers are given in Table 2. As you read their answers, keep in mind the context sketched in Figure 2. Notice that the answers given in Table 2 make sense to those acting within this organizational context. In sum, the problem—systemic distortion—emerges from the context (pattern, system) as a whole and cannot be reduced to the dishonest behaviors of individual participants. Unlike the library context, systemic distortion of information is a complex problem where the context itself demands our attention.

Figure 2 The systemic distortion of information (Bella, 1987)

The larger point is that complex problems are particularly dangerous because the individual behaviors that lead to them do not stand out as abnormal—as out of context. Quite the contrary, for as illustrated in Table 2, each individual finds good reasons for his or her behavior within the context. Indeed, distortions can become so pervasive and persistent that views contrary to—that don't fit within—the prevailing patterns are dismissed as misguided and uncalled for.

While such distortions can harm the organizations that produce them (Larson & King, 1996), they can also serve to propagate the systems that produce them, covering up adverse consequences and externalized risks. As a case in point, the model (Figure 2 and Table 2) was originally developed in 1979 to describe the frustrations of professionals involved in the assessments of environmental consequences. Here, the primary concern was self-propagating distortions that benefited the organizational system that produced them while allowing harmful consequences to accrue in the larger environment and society.

| Person in the system | Question | Assumed answer to question |
|---|---|---|
| Higher-level manager | Why didn't you consider the unfavorable information your own staff produced? | I am not familiar with the information that you are talking about. I can assure you that my decisions were based upon the best information available to me. |
| Midlevel manager | Why didn't you pass the information up to your superiors? | I can't pass everything up to them. Based upon the information available to me, it seemed appropriate to have this information reevaluated and checked over. |
| Professional technologist | Why wasn't the unfavorable information checked out and sent back up to your superiors? | That wasn't my job. I had other tasks to do and deadlines to meet. |
| Trouble-maker | Why didn't you follow up on the information that you presented? | I only worked on part of the project. I don't know how my particular information was used after I turned it in. I did my job. Even if I had all the information, which I didn't, there was no way that I could stop this project. |
| Higher-level manager | Why has the organization released such a biased report? | I resent your accusation! I have followed the development of this report. I have reviewed the drafts and the final copy. I know that the report can't please everybody, but based upon the information available to me, I can assure you that the report is not biased. |
| Midlevel manager | Why has the organization released such a biased report? | It is not just my report! My sections of the report were based upon the best information made available to me by both my superiors and subordinates. |
| Professional technologist | Why has the organization released such a biased report? | It is not my report! I was involved in a portion of the studies that went into the report. I completed my tasks in the best way possible given the resources available to me. |
| Trouble-maker | Why has the organization released such a biased report? | Don't ask me! I'm not on this project anymore and I really haven't kept up with the project. I turned in my report. It dealt with only a part of the project. |
| Higher-level manager | Why was the source of unfavorable information (the trouble-maker) removed from the project? | I hardly know the person. A lot of people have worked on this project. I must, of course, make decisions to keep this organization running, but there has been no plot to suppress people! On the contrary, my decisions have been objectively based upon the available information and the recommendations of my staff. |
| Midlevel manager | Why was the source of unfavorable information (the trouble-maker) removed from the project? | I don't like your implications! I've got tasks to complete and deadlines to meet with limited resources. I can't let everybody do their own thing; we'd never finish anything. I based my recommendations and assignments on the best available information. |
| Professional technologist | Why was the source of unfavorable information (the trouble-maker) removed from the project? | I'm not sure about the details because I don't work with him. I guess it had to do with a reorganization or a new assignment. He is a bright person, somewhat of an eccentric, but I've got nothing personal against him. |
| Trouble-maker | Why were you removed from the project? | My assignment was completed and I was assigned to another project. I don't think that anybody was deliberately out to get me. My new job is less of a hassle. |

Table 2 Reasoning of participants within the context sketched in Figure 2 (Bella, 1987)

If all systemic distortions were self-harming, we would expect this complex problem to be self-correcting. But where distortions benefit, sustain, and promote the systems that produce them, the presumption of self-correction does not apply. These are arguably the most serious forms of systemic distortion, propagating the systems that produce them.

DISTORTION AND EMERGENCE

We realize that much more is involved in organizational systems than shown in this sketch (Figure 2). But, of course, complex systems are “incompressible” (Richardson et al., 2001). Thus, all models (sketches) are simplifications. A test of any model is: Does the model describe some matters of importance better than its chief rivals?

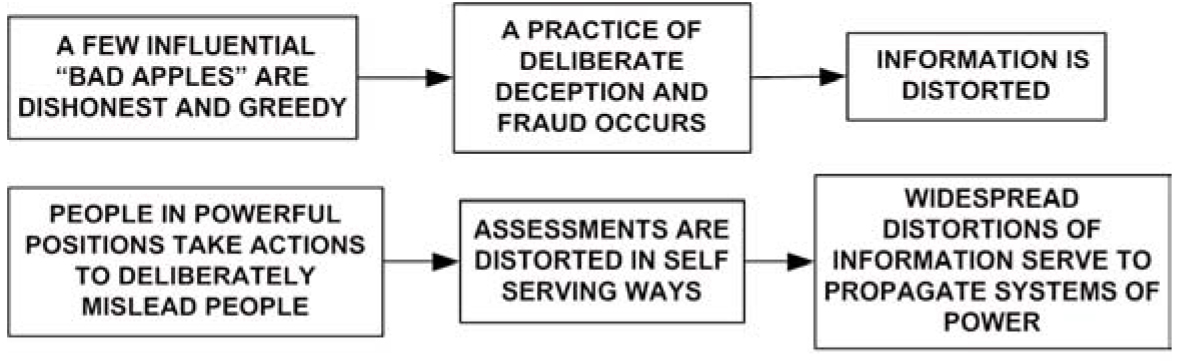

In the case of information distortion, the dominant rivals involve lines of reasoning as sketched in Figure 3.

There is much appeal to such straightforward reasoning. We can blame others and it requires little effort on our part. However, this ease of reasoning arises from linear presumptions. In brief, one presumes that wholes, systemic distortions, can be reduced to the character of parts, individuals. Ergo, blame (Figure 3). If the world were linear, such reasoning would make sense. But of course, if the world were linear we could be great musicians. On a grand piano we could play grand notes. Alas, the world is nonlinear and the sum of our grand notes does not add up to grand music! Clearly, the character of wholes cannot be reduced to the character of parts. Blame, like the pounding of individual notes, is a linear misperception that fails to conceive emergent wholes. By sketching whole behavioral patterns in a disciplined way, rather than focusing on parts (individuals), we can expose emergent phenomena not reducible to parts. However, to make such sketches, one must blame less and listen more.

Figure 3 Common and straightforward (linear) explanations of distortion

Compare Figures 1 and 2 on the one hand with Figure 3 on the other. Notice the strikingly different form of thought. Instead of a “line” or “chain” of reasoning (Figure 3), we find that human behaviors tend to settle into mutually reinforcing patterns (Figures 1 and 2). Behaviors continue because they are sustained by such patterns. Emergent behaviors arise from the patterns as wholes.
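The structural difference between a chain of reasoning and a mutually reinforcing pattern can be made concrete with a small Python sketch. The statements below are hypothetical stand-ins loosely paraphrased from the surrounding discussion; they are not the actual boxes of Figures 2 or 3, and the function name `on_a_loop` is our own assumption.

```python
def on_a_loop(arrows, start):
    """True if following arrows forward from `start` eventually returns to `start`."""
    successors = {}
    for src, dst in arrows:
        successors.setdefault(src, []).append(dst)
    stack, seen = list(successors.get(start, [])), set()
    while stack:
        node = stack.pop()
        if node == start:
            return True
        if node not in seen:
            seen.add(node)
            stack.extend(successors.get(node, []))
    return False


# Hypothetical linear explanation: a chain that ends in blame.
CHAIN = [
    ("some individuals are dishonest", "information is distorted"),
    ("information is distorted", "those individuals are blamed"),
]

# Hypothetical mutually reinforcing pattern.
LOOP = [
    ("favorable information is readily sought", "favorable information supports the system"),
    ("favorable information supports the system", "the system rewards favorable information"),
    ("the system rewards favorable information", "favorable information is readily sought"),
]

if __name__ == "__main__":
    for name, arrows in (("chain", CHAIN), ("loop", LOOP)):
        statements = sorted({s for pair in arrows for s in pair})
        print(name, [on_a_loop(arrows, s) for s in statements])
```

In the chain, no statement leads back to itself, so nothing within the explanation sustains the behavior; in the loop, every statement is both a reason for and a consequence of the others.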

Concerning “emergence,” we are in agreement with the following general statements by John H. Holland (1998):

Recognizable features and patterns are pivotal in this study of emergence... The crucial step is to extract the regularities from incidental and irrelevant details… This process is called modeling… Each model concentrates on describing a selected aspect of the world, setting aside other aspects as incidental (pp. 4-5)… emergence usually involves patterns of interaction that persist despite a continuing turnover in the constituents of the patterns (p. 7)… Emergence, in the sense used here, occurs only when the activities of the parts do not simply sum to give activity to the whole (p. 14).

This modeling approach (disciplined sketching) is different from (and we believe complementary to) the approaches of Holland and others. Nevertheless, Holland's statements do apply to our notion of systemic distortions as emergent phenomena. Furthermore, we find that these sketches are quickly grasped by participants within organizational systems. Indeed, Figure 2 was sketched in response to the stories of frustration of people within actual organizational systems (Bella, 1987). Rather than dismissing their frustrations as evidence of “poor attitude” (a form of blame), we took them seriously and sketched the context that (a) made sense of credible frustrations and (b) avoided blame. In brief, amid the busy activities of countless people involved in endless tasks, general behaviors tend to settle into mutually reinforcing patterns. These patterns constitute the contexts for the normal behaviors of well-adjusted people. In scale, duration, and complexity, the distortions that can emerge from such patterns far exceed the capabilities of deliberate designs. This has radical implications that are easily overlooked by a more straightforward (linear) understanding of distortion (Figure 3).

IMPLICATIONS FOR OUR EMERGING TECHNOLOGICAL WORLD

In the modern world, getting a drink of water is such a simple thing. Merely turn the faucet. But this simple act depends on a vast technosphere far beyond the capacity of any group to design deliberately. The components of our faucet may come from distant parts of the world. Following the faucet back through pipes, pumps, electrical grids, power plants, manufacturing facilities, and mining operations, one encounters vast and interconnecting complexities in all directions. The technological, informational, and financial interconnections are bewildering and vast. Yet, somehow it all comes together so that you can turn on your faucet and get water.

This global technosphere requires the busy and highly diverse activities of countless people involved in endless tasks. If we focus on only one project manager on one of seemingly countless projects, we find remarkable abilities that few of us appreciate. The real challenge is not scientific analysis of some physical entity or device. Rather, the challenge is getting the right materials, equipment, people, and information together at the right place and at the right time within a world that is constantly changing and ripe with the potential for countless conflicts and misunderstandings. If one is not impressed by the abilities of successful project managers, one simply does not understand what is going on.

However, the scale and complexity of such human accomplishments—the continuously changing technosphere on which we depend—extend far beyond the abilities of any conceivable project management team. Our drink of water, indeed our very lives, depend on vast, complex, adaptive, and nonlinear (CANL) systems. They are self-organizing and continually adapting. Through them, highly diverse activities are drawn together and outcomes emerge far beyond the abilities of individuals and groups. The activities of numerous people, including our successful project manager, occur within the contexts sustained by such CANL systems. Indeed, contexts are CANL systems emerging at multiple levels, shaping behaviors in coherent ways despite vast differences among individuals and the tasks they perform.

A growing body of literature, popular and academic, has been rightly fascinated by the remarkable behaviors of CANL systems, which are not subject to our traditional analytical habits of thought (Kauffman, 1995; Waldrop, 1992; Wheatley, 1992). Richardson et al. (2001) write:

Complexity science has emerged from the field of possible candidates as a prime contender for the top spot in the next era of management science.

We agree. We are concerned, however, that too often the literature is silent on the dangers of emergent outcomes in human affairs. Indeed, some (Stock, 1993) treat emergent order in human affairs with a religious-like reverence. In our assessment, emergent outcomes can be powerful and they can also be dangerous, deceptive, and distorting.

We therefore face a worrying imbalance. Complexity science may well help managers expand the effectiveness, scale, and influence of organizational systems. But with respect to the distortion of information, a crude form of linear reductionism, blame, continues to prevail.

EMERGENCE AND THE DARK SIDE OF ORGANIZATIONAL SYSTEMS

There is a dark side to organizational systems that is widespread and well documented (Vaughan, 1999). Much of this involves the emergence of systemic distortions. After the first shuttle (Challenger) explosion in 1986 (see Vaughan, 1996), Figure 2 and Table 2 were sent to Nobel physicist Richard Feynman, who served on the commission to investigate the accident. Although the model was developed long before the accident without any study of NASA, Feynman (1986) wrote back:

I read Table 2 and am amazed at the perfect prediction of the answers given at the public hearings. I didn't know that anybody understood these things so well and I hadn't realized that NASA was an example of a widespread phenomena.

We agree that general phenomena are involved, emergent phenomena that too often are distorting and destructive. Did the second and more recent shuttle (Columbia) tragedy involve such phenomena? Follow the investigation and judge for yourself.

The tobacco industry serves as an exemplar of our concerns. Here we find global systems that were among the most economically successful and powerful of the twentieth century. They produced addictive products that contributed to the deaths of 400,000 Americans every year. The method presented in this article has been applied to the tobacco industry, illustrating how its economic success was closely tied to systemic and self-propagating distortions that emerged over many decades (Bella, 1997).

The widespread distortions of information in recent years by huge businesses, including the formerly prestigious accounting firm of Arthur Andersen, provide evidence of much more than individual fraud (Toffler, 2003). “Favorable” information (hyping stock, inflating profits, etc.) gushed from these systems while “unfavorable” information (exposing risks, improper accounting, unethical behaviors, etc.) too often went nowhere. The transfers of wealth were enormous; some, who exploited the distortions, made fortunes; many lost savings (Gimein, 2002).

What, then, tends to limit the extent of systemic distortions and their consequences? Our answer reflects a growing understanding of CANL systems in general. The character of CANL systems emerges from the interplay of order (pattern) and disorder (disturbance) over time (Bella, 1997). As an example, forest ecologists tell us that the state of a forest ecosystem reflects its “disturbance regime,” its history of events (fires, storms, etc.) that disturbed emerging patterns. In a similar manner, we claim that the extent of systemic distortion reflects the history of credible disturbances, those compelling events that disrupt more distorting patterns, allowing less distorting patterns to emerge.

In human systems, credible disruptions arise in two forms. First, they arise through the independent inquiries, questions, objections, and challenges that people raise. Second, they arise from accidents, explosions, and collapses that the system cannot cover up. Both serve to disrupt distorting patterns, but, clearly, the former are preferable to the latter.

Credible disturbances of the first (human) kind emerge from independent checks and open deliberation. This view leads to a conjecture:

The extent of systemic distortions and their consequences have been, are, and will be inversely proportional to the history of credible disturbances sustained by independent checks and public deliberation not shaped by the systems themselves.

If evidence supports such a conjecture, as we believe it will, then we must take far more seriously the crucial importance of independent checks, including public agencies, and what Eisenhower (1961) called the duties and responsibilities of an “alert and knowledgeable citizenry.” The implications are significant: The market is insufficient, independent public agencies are vital, and higher education must involve far more than economic development and job preparation.

RESPONSIBILITY: BEYOND LINEAR PRESUMPTIONS

Conventional notions of responsibility—and, more generally, notions of good and evil—reflect linear presumptions. This linear attitude can be simply stated: “I'm a responsible person if I act properly, don't lie, cheat, or steal, and do my job in a competent manner.” The problem raised by such common understandings of responsibility is that we see no reason why the individuals of Table 2 would not fit this notion of responsible behavior. Likewise, people tend to reason that evil outcomes arise from evil people: “If the individuals are good (competent, well-intended), then the outcomes should be good.” Such linear perceptions fail to grasp the importance of emergent outcomes. Distorting and even evil outcomes can and do emerge from the actions of individuals, in spite of the fact that each may believe that his or her own behaviors are proper and competently done—those in high positions might honestly say, “There was no attempt to mislead or deceive.” And within their contexts, they may be correct. However, if the context is the problem, then emergence has radical implications for notions of responsibility.

We do not deny the importance of competency, involving actions that are credible, rightly done, and commendable within a given context (game, course, assignment, organizational system, market, etc.). Competency calls for the ability to respond in ways credible within the context. Yet, there is no reason to assume that greater competency would reduce systemic distortions; in fact, the reverse may be true! When the context is the problem, an additional and radically different form of responsibility is required, responsibility that transcends contexts.

The responsibility to transcend context is a universal historical theme in the search for Truth, the pursuit of Justice, and the service of the Sacred. Responding to a calling that transcends context serves to liberate us from our bondage to contexts. Such contexts give us reasons to say, “I just don't have the time,” “It's not my job,” “It won't make any difference.”

The traditional name for a responsibility to transcend context, one that informs the essence of universal intent, is “faith.” Wilfred Cantwell Smith (1977, 1979), who devoted a lifetime of study to the meaning of faith in other ages and cultures, found that faith never meant belief (and especially not belief in spite of evidence to the contrary). Rather, the very meaning of faith has been radically distorted (lost, given up) in our modern age. The point is that faith does not deny the reality of context; faith transcends context. To paraphrase Viktor Frankl (1978), faith “pulls” or “calls” for responsible behaviors from beyond, above, or even “in spite of” the context.

None of this, however, makes sense unless the dangers of contexts themselves become apparent. Distortions and, yes, evil can be, and often are, emergent outcomes not reducible to the “values” and “beliefs” of individuals. Without an appreciation of the dangers of emergence and the need for responsibility that transcends context, faith becomes reduced to individual beliefs and, more dangerously, the self-reinforcing behaviors of “true believers.”

The method of disciplined sketching proposed herein seeks to expose the dangers of such reductionist thinking. This method, therefore, entails relational self-knowledge: know thy contexts. While such a challenge may be easily voiced, enacting it requires transcending the system. In our modern age of organizations, this constitutes a basic moral calling.

EPILOGUE

In 1982 John Naisbitt published a widely acclaimed book, Megatrends. In this book he declared:

In the information society, we have systematized the production of knowledge and amplified our brainpower. To use an industrial metaphor, we now mass-produce knowledge and this knowledge is the driving force of our economy.

In the decades that followed, such declarations became so pervasive that objections to the idea might well be called a heresy of the age. Naisbitt's statement expresses the ideology of the age and, for many, its intellectual paradigm. Nevertheless, in this age we find evidence of information distortions arising from systems that selectively shape information in self-propagating ways. Such distortions, we claim, are emergent phenomena, emerging from patterns of behaviors that provide the contexts within which normal and well-adjusted people are busy at work. We provide a method to sketch such patterns.

To grasp the heretical implications of such claims, change one word in Naisbitt's declaration. Instead of “knowledge,” insert the word “propaganda”—information selectively shaped to propagate the systems that produce it. The declaration now reads:

In the information society, we have systematized the production of propaganda and amplified our brainpower. To use an industrial metaphor, we now mass-produce propaganda and this propaganda is the driving force of our economy.

Clearly, there is a moral difference. Judge for yourself. Has this “information age,” our “knowledge” economy, provided evidence that this alteration of Naisbitt's declaration (and many others) should be taken seriously? We believe it has. But the evidence will not be understood if we persist in linear presumptions, reducing all distortions to individual fraud, a “few bad apples,” or conspiracies to deliberately mislead or deceive. Emergence is real. Distortions emerge. This article presents a method to reveal how distortions emerge from contexts that well-adjusted people take for granted.

References

Bella, D. A. (1987) “Organizations and systemic distortion of information,” Journal of Professional Issues in Engineering, 113: 360-70.

Bella, D. A. (1996) “The pressures of organizations and the responsibilities of university professors,” Bioscience, 46(10): 772-8.

Bella, D. A. (1997) “Organized complexity in human affairs: The tobacco industry,” Journal of Business Ethics, 16: 977-99.

Eisenhower, D. D. (1961) “Farewell radio and television address to the American people,” Public Papers of the President, Washington, D.C.: U.S. Government Printing Office, 1035-40.

Feynman, R. P. (1986) Personal communication to D. A. Bella, June 27.

Frankl, V. (1978) The Unheard Cry for Meaning, New York: Simon & Schuster.

Gimein, M. (2002) “You bought, they sold,” Fortune, 146(4): 64-74.

Holland, J. H. (1998) Emergence, Reading, MA: Perseus Books.

Kauffman, S. (1995) At Home in the Universe, New York: Oxford University Press.

Larson, E. W. & King, J. B. (1996) “The systemic distortion of information: An ongoing challenge to management,” Organizational Dynamics, 24 (Winter): 49-61.

Milgram, S. (1974) Obedience to Authority, New York: Harper Perennial.

Milgram, S. (1992) The Individual in a Social World: Essays and Experiments, J. Sabini & M. Silver (eds), New York: McGraw-Hill.

Naisbitt, J. (1982) Megatrends, New York: Warner Books.

Richardson, K. A., Cilliers, P., & Lissack, M. (2001) “Complexity science: A 'gray' science for the 'stuff in between,'” Emergence, 3(2): 6-18.

Smith, W. C. (1977) Belief and History, Charlottesville, VA: University Press of Virginia.

Smith, W. C. (1979) Faith and Belief, Princeton: Princeton University Press.

Stock, G. (1993) Metaman: The Merging of Humans and Machines into a Global Superorganism, New York: Simon & Schuster.

Toffler, B. L. (2003) Final Accounting, New York: Broadway Books.

Vaughan, D. (1996) The Challenger Launch Decision, Chicago: University of Chicago Press.

Vaughan, D. (1999) “The dark side of organizations: Mistake, misconduct, and disaster,” Annual Review of Sociology, 25: 271-305.

Waldrop, M. M. (1992) Complexity, New York: Simon & Schuster.

Wheatley, M. J. (1992) Leadership and the New Science, San Francisco: Berrett-Koehler.