When Modeling Social Systems, Models ≠ the Modeled:

Reacting to Wolfram's 'A New Kind of Science'

Michael R. Lissack

ISCE, USA

Kurt Richardson

ISCE, USA

Introduction

Much media attention has been directed to the May 2002 release of Stephen Wolfram's A New Kind of Science. What has not been particularly highlighted is that this book is the latest shot in an ongoing dispute between the hard physical sciences and the “soft” social sciences such as sociology, anthropology, and other observation-based studies of human affairs. Wolfram (2002: 9) writes:

One will often have a much better chance of capturing fundamental mechanisms for phenomena in the social sciences by using instead the new kind of science that I develop in this book based on simple programs … Indeed the new intuition that emerges from this book may well almost immediately explain phenomena that in the past have seemed quite mysterious.

He goes on to suggest that “most of the core processes needed for general human-like thinking will be able to be implemented with simple rules … once one has an explicit system that successfully emulates human thinking” (Wolfram, 2002: 629-30). He continues: “no doubt as a practical matter this could be done … by large scale recording of experiences of actual humans” (Wolfram, 2002: 630).1

Central to Wolfram's premises is an all-too-common mistake: the assertion that by studying models we can directly study the things, systems, or people being modeled. Despite the promise of massive computer simulations and the development of “intelligent agents,” tests or studies of computer models, be they cellular automata or quantum computing, remain just that: studies of models, not direct studies of the people or social systems being modeled. John Casti (1999a) has made a similar argument in the pages of New Scientist:

Large-scale, agent-based simulations … are exactly the sort called for by the scientific method … So, for the first time in history, we have the opportunity to create a true science of human affairs.

With each reading of that sentence, alarm bells must sound among all who do field studies on actual people. Wolfram's claim is stronger: “Simple programs constructed without known purposes are what one needs to study to find the kinds of complex behavior we see” (Wolfram, 2002: 1185). “The principle of computational equivalence forces a new methodology based on formal models” (Wolfram, 2002: 1197). If Wolfram and Casti are to be believed, economics and most of social science would acquire new meaning, for theory could actually be tested before some governmental official (well-intentioned or not) puts it into practice. They make this claim despite some commentators' warnings concerning the deep theoretical limitations associated with such approaches (see, e.g., Oreskes et al., 1994; Cilliers, 2000a; Richardson, 2002b, 2002c). If only matters were so straightforward. Casti's rhetoric was far more succinct than Wolfram's 1,200-page tome, but both take aim at the need for field studies. Such persistent polemics are both dangerous and misguided and therefore need further rebuttal.

In recent years new concepts and methods of modeling have combined with substantial computer power to open up the possibility of applying them in the social sciences (see, e.g., Goldspink, 2002). From a practical point of view, modeling social systems should be understood as an extension of the way in which we have always dealt with social systems. Models in the computer are extensions of our thinking processes, which use what we think we know to consider various scenarios and so help in choosing a course of action: “models are inspirational rather than containers of truth” (Richardson et al., 2000). Models are also extensions of the stories (novels, etc.) we tell, which help us teach others, particularly children, what we have learned from experience. As Pidd (1996: 122) points out, “models are developed so as to allow people to think through their own positions and to engage in debate with others about possible action” rather than to serve as “a proper representation of part of the real world.” From a scientific perspective, models help us understand the logical consequences of specific assumptions that may or may not have a basis in the real world. This helps us, in a limited sense, to validate or refute assumptions, which is important in developing better models.

Here, agent-based models (of the Santa Fe type) need to be distinguished from the cellular automata models of Wolfram. Agent-based models at least claim to be founded on a logic and a narrative. Wolfram makes no such claim for his automata models. This gives still more pause to anyone, business leader or policy maker, who must interpret the results.

The opportunities given by new modeling methods are sometimes confused with the idea that models of social systems will work in a similar manner to how they work in describing simple mechanical systems, that is, by predicting precise outcomes, or serving as oracles that seem to tell absolute truths. Hereafter in this article this view shall be referred to as the “merely study models” or MSM perspective. There are scientifically based reasons (especially within the complexity community) to expect that models cannot serve us in the way that representationalist MSM proponents believe. Moreover, achieving models that can serve as transparent and effective aids to decision making requires substantial effort. Learning to use models wisely is as important a part of this process as developing them: “[M]odels are tools that can be used and abused—the best models are worthless in linear hands” (Richardson, 2002b).

Today MSM proponents have the upper rhetorical hand. But belief in such naïve rhetoric contributed mightily to the financial mess created by the collapse of Long-Term Capital Management in 1998 and to the assorted dislocations caused by rigid adherence to the IMF's “scientific” models. Even worse, the rhetoric suggests that research funding would be “better spent” on perfecting computer simulations than on field studies of actual humans. Despite the widespread and demonstrable failures of computer simulation in informing effective decision making, many organizational OA (operational analysis) departments still strive for bigger and better, all-embracing computer models. The illusion of knowledge-based certainty that always accompanies such methods is seemingly preferable to accepting and managing the real world's inherent uncertainty; we seem to prefer a false sense of security over “an awareness of the contingency and provisionality of things” (Cilliers, 2000b).

When used wisely, models provide a forum for dialog and discourse: between expectations and results, between model and observation, between versions of an ever-being-revised model. Central to that dialog is the recognition that a model without observations of the modeled is a monolog with no audience. The risk of the Wolfram position is that “decision makers” will provide that monolog with a rapt audience and will fail to question the absence of the dialogic elements necessary for proper decision making. Merely to study models, without concern for the observed or the modeled, suggests a positivistic faith in the powers of prediction and self-fulfilling cause, a faith that is foolish when applied to the “natural sciences” and dangerous when applied to the social sciences.

THE RHETORIC

Wolfram has become the most recent, and John Casti perhaps the most vigorous, proponent of the misconception that the study of models is the study of people (a view labeled herein “strong MSM”). But they have many compatriots. The strong MSM of Wolfram, Casti, and others of their ilk would be well placed if either science or the prediction business were solely concerned with the abstract behavior of large numbers of abstract people. Indeed, this type of science has its place. In it, the study of individuals and their behaviors can be supplanted by the study of large groups, generating descriptive information about the average behavior, deviations from that average, and similar measures of the group's movement through possibility space. Nevertheless, it is one thing to assert a place for simulations and modeling, and another to assert that such models hold the answers that actual observation cannot supply.

Despite Oreskes et al.'s (1994) masterly critique of the power of rule-based simulation, Wolfram writes, “Systems with extremely simple rules can produce behavior that seems to us immensely complex” (Wolfram, 2002: 735); “… and that look like what one sees in nature. And I believe that if one uses such systems it is almost inevitable that a vast amount … will be possible” (Wolfram, 2002: 840). Casti, writing in 1999, prefigured and amplified these thoughts:

I feel strongly that it would be wrong to exclude many important complex systems from the possibility of being explored in simulated worlds just because people are part of a system's complex interactions. It's perhaps because I am a mathematician and modeler, rather than a social scientist, that I disagree with the belief that it is impossible to develop any kind of theory about social systems because people are too complex and unfathomable to be encapsulated in equations and rules. The power and versatility of modern computers now makes it possible to use artificial worlds to test out theories about social and behavioral systems which will enable us to get closer to identifying what rules actually drive the relationships between human agents and other system entities.2

Many scientists doubt this. For example, the issues usually found to be fundamentally important to business (intuition, creativity, response to uncertainty, response to unpredictable events, and so on) are not what gets modeled, yet they are what drive business.

Casti and his colleagues hold a further misconception on which their strong MSM is based, namely, the notion that people are rule-following creatures:

From a philosophical perspective, it is reasonable to postulate that the behavior of human agents in a system can be interpreted as being generated by rules, even if the people do not explicitly invoke rules when they act and find it difficult to define such rules if you ask them. (Casti, 1999b)

The echoes of Wolfram's simple rule-following systems are obvious; the difference is that Casti proclaims that such rules are based on logic, whereas Wolfram makes no such claim. Many strong MSMers overstate a much weaker claim made by John Holland (the father of genetic algorithms), who argues that we can often learn by treating humans as if they were rule-following creatures. The MSM perspective discounts the notion that we simply exhibit behaviors and that, if pushed to explain them, we might often articulate a “rule” that finds greater truth in the exception than in the practice. Wolfram throws away claims about such inferred logical rules and begins with his own notion of simple rules. He continues:

Inevitably we tend to notice only those features that somehow fit into the whole conceptual framework we use. And insofar as that framework is based even implicitly upon traditional science it will tend to miss … traditional logic is in fact in many ways very narrow compared to the whole range of rules based on simple programs. (Wolfram, 2002: 843)

If humans were indeed like subatomic particles, individual yet indistinguishable, these modeling notions might hold (see Pesic, 2002, for much more on this). However, while such a view of human behavior may work when modeling crowds, traffic, or the stock market (see Wolfram, 2002: 1014), it denies the very essence of being human and of the narratives making up our varied identities. As Pesic writes:

In the story of individuality, contrasting visions confront each other. The individuality of each person and macroscopic object is unique, like Hector's shining helmet. Yet that helmet, like each of us, is made of electrons that blend and merge. The world as we experience it calls us to reconcile these views; vast numbers of identical beings can form structures whose complex configurations give them the appearance of uniqueness. Here we return to the question … [of] whether the individuality of persons really touches the individuality of things. I may be like the ship of Theseus, a phantom haunting itself, for on the atomic level I have no individuality. (Pesic, 2002: 148)

But as Steven Levy (2002) writes of Wolfram in Wired:

Basically, he's saying that all we hold dear—our minds, if not our souls— is a computational consequence of a simple rule. “It's a very negative conclusion about the human condition,” Wolfram admits. “You know, consider those gas clouds in the universe that are doing a lot of complicated stuff. What's the difference [computationally] between what they're doing and what we're doing? It's not easy to see.”3

So much for the notions of purpose, narrative, or emotions.

“Scientists,” Wolfram told Business Week (May 2002), “should be striving to uncover the underlying simplicity—not just searching for explanations by carving complex phenomenon into smaller and smaller, more digestible pieces.” MSM proponents claim to have found such underlying explanations. The strong MSM proponents look at the results of a model, find an analogy in human (or other) behavior, and proclaim that the analogy proves that the underlying mechanism is one of “simplicity.” Wolfram makes the following point repeatedly:

Whenever a phenomenon is encountered that seems complex it is taken almost for granted that the phenomenon must be the result of some underlying mechanism that is itself complex. But my discovery that simple programs can produce great complexity makes it clear that this is not in fact correct. (Wolfram, 2002: 4)

Yet, as celebrated Harvard psychologist Jerry Kagan (2002: 17) notes, “Unfortunately function does not reveal form.” Nor vice versa, at least when human behavior is concerned. Just because simple rules can produce complex behavior does not mean that all complex behavior can be explained by simple rules (Oreskes et al., 1994).
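For readers unfamiliar with the cellular automata at the center of Wolfram's argument, a minimal sketch of his best-known example, the elementary cellular automaton Rule 30, may make the claim concrete. Each cell looks only at itself and its two neighbors, and the eight bits of the rule number say which of the eight possible neighborhoods produce a black cell in the next generation. The grid width, number of steps, fixed white boundaries, and text rendering below are arbitrary choices for illustration. Note what the sketch does and does not show: it demonstrates that a simple rule can generate an intricate pattern, but nothing in it licenses the converse inference, criticized above, that an observed complex pattern must come from such a rule.

```python
def step(cells, rule=30):
    """Apply an elementary cellular automaton rule once.

    Cells beyond the edges are treated as permanently white (0).
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        left = cells[i - 1] if i > 0 else 0
        centre = cells[i]
        right = cells[i + 1] if i < n - 1 else 0
        # Read the 3-cell neighbourhood as a number from 0 to 7 ...
        neighbourhood = (left << 2) | (centre << 1) | right
        # ... and look up the corresponding bit of the rule number.
        new_cells.append((rule >> neighbourhood) & 1)
    return new_cells


def run(width=63, steps=31, rule=30):
    """Start from a single black cell in the middle; return every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Setting `rule=90` instead produces a regular, nested triangular pattern, a useful contrast: the same eight-bit machinery yields either apparent disorder or obvious regularity, and looking at the output alone does not tell an observer which rule, if any, produced it.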

Stephen Lansing addresses this concern in a recent Santa Fe Institute working paper (2002). Lansing writes of the “limitations of a social science methodology based on descriptive statistics,” and goes on to quote Epstein and Axtell (1996):

What constitutes an explanation of an observed social phenomenon? Perhaps one day people will interpret the question, “Can you explain it?” as asking “Can you grow it?” Artificial society modeling allows us to grow social structures in silico demonstrating that certain sets of microspecifications are sufficient to generate the macrophenomena of interest.

Lansing wisely notes, “one does not need to be a modeler to know that it is possible to grow nearly anything in silico without necessarily learning anything about the real world.”

The act of interpreting differs from the act of observing, and both may differ significantly from the underlying phenomenon being observed. In their failure to respect this distinction, strong MSM proponents are implicitly suggesting that the interpretation is reality. However, while a good model of complex systems can be extremely useful, it does not “allow us to escape the moment of interpretation and decision” (Cilliers, 2000a). Most well-informed followers of the philosophy and history of science would recognize this perspective for what it is: social constructionism (see Berger & Luckmann, 1966; Hacking, 1999). The constructionist stance, which underlies the MSM viewpoint, seems to be deliberately overlooked by its followers (who as rhetorical positivists would be forced to reject it).

MSM takes a social constructionist stance for the very reason that Ian Hacking, in his brilliant book The Social Construction of What? (1999), suggests underlies all forms of such an argument, namely an objection to the idea that “in the present state of affairs, X is taken for granted, X is inevitable … [but] X need not be at all as it is.” Note that Hacking restricts X to ideas. In this case X is the understanding that human interactions are complex, situated, subject to multiple interpretation, and usually unique. How much simpler the world would be if only we could see and understand that such complexity is but a figment of our minds.

Herein lies the second risk to scientific endeavors posed by proponents of the strong MSM perspective. To the extent that the strong MSMers are believed, decision makers will rely on their simplifying models and analogies. And, over time, the models will be proven wrong. Given enough disconfirming experiences, the general public will reject modeling and simulation as a tool, and both funding and exposure to interesting problems will dry up. In an era where Long-Term Capital Management's founders can still argue with a straight face that “it was the world not our model” (and have the nerve to operate yet another hedge fund), the rhetorical risks posed by MSM are mighty indeed.

Furthermore, MSM proponents often claim that “simulation is changing the frontiers of science,” just as Wolfram proclaims the study of automata to be “a new kind of science.” If the frontiers of science were only to be found in computer laboratories, perhaps this claim might have merit, but the many scientists doing nonsimulation-based work have every reason to be skeptical. In this regard, the popular press has greatly overplayed the potential value of the computer simulation-based approach of the Santa Fe Institute, among others, with regard to complex systems research. Complex systems thinking is more than merely simulations and their results. Social science is more than any model can deliver, let alone just computer models. MSM's claims to the contrary are biased at best and pose a risk to the funding of basic research for all engaged in the actual observation of human affairs. The mere fact that a computer simulation can be run does not substitute for a study of human behavior in practice. Nevertheless, try convincing funding sources of that stance while Wolfram, Casti, and others are proclaiming the “virtues” of simulation models for “advancing science” and the popular press is lending credence to the primacy of such a view.

MODELING REDEEMED

Both Suppe (1989) and van Fraassen (1980) suggest that

a scientific theory is an attempt to either isolate or idealize a system—usually a physical system—in such a way that its dynamics can be reduced to a manageable number of variables (each of which is usually represented by a theoretical term) related by a mathematical description, so that the model generates a restricted number of likely outcome states. It is often neutral with respect to many of the attributes of the entities and processes it covers (Wilkins, 1996).

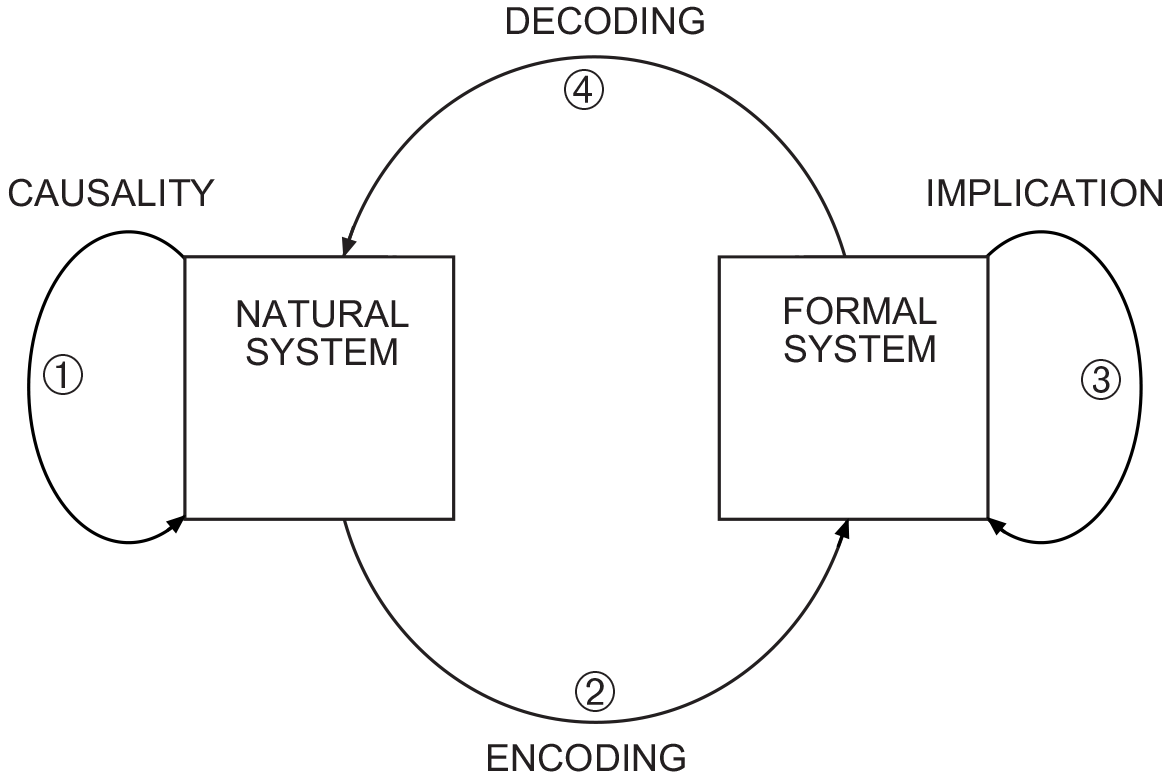

The very complexities of human affairs suggested above are examples of the types of attributes and processes about which a scientific theory may be neutral in its efforts to describe abstract and group phenomena. The act of isolating or idealizing has been termed by Rosen, among others, the “modeling relation.” The features of this widely known relation are shown in Figure 1.

The model occupies the right-hand side of the picture. The observable real-world system (whatever it may be) occupies the left. In between lies the world of the observer, who not only makes observations about the real-world system but also interprets what those observations imply for the model and what the outcomes of running the model might mean in the real world. The observer has the key role in the modeling relation.

Figure 1 Two systems, a natural system and a formal system, related by a set of arrows depicting processes and/or mappings. The assumption is that when we are “correctly” perceiving our world, we are carrying out a special set of processes that this diagram represents. The natural system represents something that we wish to understand. Arrow 1 depicts causality in the natural world. On the right is some creation of our mind or something our mind uses in order to try to deal with the observations or experiences that we have. Arrow 3 is called “implication” and represents some way in which we manipulate the formal system to try to mimic causal events observed or hypothesized in the natural system on the left. Arrow 2 is some way that we have devised to encode the natural system, or, more likely, selected aspects of it (having performed a measurement), into the formal system. Finally, arrow 4 is a way that we have devised to decode the result of the implication event in the formal system to see if it represents the causal event’s result in the natural system. (Adapted from Mikulecky (n.d.).)
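The four arrows of Figure 1 can be made concrete with a toy example. In the sketch below, everything is hypothetical and invented for illustration (the growth process, the measurement precision, and the 5% model rate): arrow 1 is a noisy “natural” growth process, arrow 2 is a measurement rounded to the nearest hundred, arrow 3 is a formal model that assumes a fixed growth rate, and arrow 4 reads the model's output back as a claim about the world. The function reports how often the two routes around the diagram agree (whether, in the usual phrasing, the diagram commutes). The answer depends on the tolerance the observer is willing to accept, which is one way of seeing why the observer holds the key role in the relation.

```python
import random


# Arrow 1 (causality): a hypothetical "natural system" we can only observe.
def natural_step(population, rng):
    growth = 0.05 + rng.gauss(0, 0.02)  # noisy, partly unknown dynamics
    return population * (1 + growth)


# Arrow 2 (encoding): a measurement that captures one aspect, imprecisely.
def encode(population):
    return round(population, -2)  # observer records to the nearest hundred


# Arrow 3 (implication): the formal model, with its simplifying assumption.
def implication(x):
    return x * 1.05  # assumes a fixed 5% growth rate


# Arrow 4 (decoding): reading the model's output back as a claim about the world.
def decode(x):
    return x


def agreement_rate(start, tolerance, trials=1000, seed=0):
    """Share of trials in which the two paths around Figure 1 agree,
    i.e. the relative gap is within the observer's chosen tolerance."""
    rng = random.Random(seed)
    predicted = decode(implication(encode(start)))  # arrows 2, 3, 4
    hits = 0
    for _ in range(trials):
        observed = natural_step(start, rng)  # arrow 1
        if abs(observed - predicted) / observed <= tolerance:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for tol in (0.001, 0.01, 0.05):
        rate = agreement_rate(10_000, tol)
        print(f"tolerance {tol:>5}: paths agree in {rate:.0%} of trials")
```

Nothing in the code decides which tolerance is “right”; that judgment, like the choice of what to measure in `encode`, sits with the observer rather than with the model.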

The modeling relation is intimately familiar to nearly all of us, despite our unfamiliarity with its label. If we were to substitute the word “novel” for “model” and “writer and reader” for “observer,” the relation would neatly capture the world of fiction. Similar substitutions can be made with screenplay, writer, and movie-goer, or with artwork, artist, and viewer. Mathematical models are not of a different type; they merely use a different form of expression. Notice, however, that we are much more comfortable recognizing the “model” aspects of a play, a movie, or a novel than those of flu shot warnings, a consumer price index report, or a weather forecast.

An important aspect of models is the indexicality of their subject. Indexicality is the quality of being able to serve as a “stand-in,” as a generic variable. Indexicals derive their meaning from an interaction with their contexts and situatedness. The greater the indexicality of the subject, the more likely it is that multiple observers will reach similar conclusions from an examination of both model and modeled, and that by abduction and induction the results of a model will be socially accepted as “facts” about the modeled. When the real-world system has indexicality, it is easier to accept the indexicality of the necessarily simpler model. When the real-world system, by contrast, has individuality, the indexicality of the model becomes a limitation that tends to restrict the validity of the model to group behaviors, provided that the law of large numbers (itself an indexical model) applies. Thus, we are better able to accept modeling results concerning atoms (which are highly indexical) than modeling results concerning ourselves (whom we think of as individual and not indexical). Both horoscopes and Myers-Briggs tests serve to replace our individuality with indexicals (Capricorns and INTJs). Wolfram replaces our individuality with simple programs. The agent-based models of which Casti, Holland, and the strong MSM proponents are so fond replace our individuality with other indexicals, namely agents.
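The point that an indexical model's validity is restricted to group behavior, provided the law of large numbers applies, can be illustrated with a short simulation. The sketch below assumes a hypothetical population in which every person has a different propensity to act; the population size, the propensity range, and the number of runs are arbitrary. Re-running the very same model leaves the share of people who act almost unchanged, while a large fraction of individuals behave differently from one run to the next: the model speaks reliably about the group and hardly at all about any particular person.

```python
import random
import statistics


def run_once(propensities, rng):
    """One simulated period: each person acts (1) or not (0) according to
    their own individual propensity."""
    return [1 if rng.random() < p else 0 for p in propensities]


def compare_levels(n_people=10_000, n_runs=20, seed=1):
    rng = random.Random(seed)
    # Hypothetical heterogeneous population: every person gets a different
    # propensity, so no single individual is the "typical" one.
    propensities = [rng.uniform(0.1, 0.9) for _ in range(n_people)]
    expected_share = statistics.mean(propensities)

    runs = [run_once(propensities, rng) for _ in range(n_runs)]
    group_shares = [sum(r) / n_people for r in runs]

    # Group level: how far does the share of actors stray from its expectation?
    worst_group_error = max(abs(s - expected_share) for s in group_shares)

    # Individual level: what fraction of people behave differently in two
    # successive runs of the very same model?
    flips = [
        sum(a != b for a, b in zip(runs[i], runs[i + 1])) / n_people
        for i in range(n_runs - 1)
    ]
    return expected_share, worst_group_error, statistics.mean(flips)


if __name__ == "__main__":
    share, group_err, flip_rate = compare_levels()
    print(f"expected share of actors:      {share:.3f}")
    print(f"worst group-level deviation:   {group_err:.3f}")
    print(f"individuals changing behavior: {flip_rate:.1%}")
```

With these settings roughly two in five individuals change behavior between runs, while the group share barely moves; the stability belongs to the aggregate, not to the people who make it up.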

In developing our understanding of social systems, indexical models are useful for describing group characteristics. At the level of an indexical group, computer simulation models can contribute to understanding what is not available from the study of individuals. For example, in a Santa Fe Institute study of firms charged with improving their technology, Jose Lobo and William Macready (1999) found

that early in the search for technological improvements, if the initial position is poor or average, it is optimal to search far away on the technology landscape; but as the firm succeeds in finding technological improvements it is optimal to confine search to a local region of the landscape.

Such a finding does not purport to tell any particular firm what to do, but instead describes a general finding with respect to indexical firms.

Similarly, in another Santa Fe Institute study by Levitan et al. (1999), it was found that

optimal group size relates to the magnitude of externalities and the length of the search period. Our main result suggests that for short search periods, large organizations perform best, while for longer time horizons, the advantage accrues to small sized groups with a small number of (but not no) externalities. However, over these long time horizons, as the extent of externalities increases, modest increases in group size enhances performance.

Again, no specific group size recommendation is forthcoming, merely findings about indexical groups.

Explicating such findings and allowing decision makers to think through their implications are perhaps the most valuable contributions that such models can make. There is no need for the models in question to have predictive power, despite the strong desire of both consultants and their clients that such models “work.” The pedagogical value of exploring the interactions of complex relations through the manipulation of models is more than enough to justify the efforts that go into model development and proliferation (Bankes, 1993). Clearly, it is easier to manipulate a computer model than a fully fledged “in reality” laboratory experiment, but the limitations of such models must be remembered. Although the research presented in Kagel et al.'s (1995) Economic Choice Theory: An Experimental Analysis of Animal Behavior found that animal experiments can confirm economic precepts, those same precepts are often confounded by individual humans. As with the laboratory experiments, so too with the computer models.

Social science modeling is not new. What is new is the amount of computational power that can be devoted to the models. There is, however, no demonstrated relation between the amount of computer power devoted to a model and the quality of its results. Apollo reached the moon with less computer power than is on your desktop, and history is filled with examples of powerful writing that seems to have changed the attitudes and actions of nations. The latter case illustrates the power of a well-drawn indexical: when readers can self-identify with what they are reading, the story is far more potent.

Lansing (2002) suggests that

computers offer a solution to the problem of incorporating heterogeneous actors and environments and nonlinear relationships (or effects). Still, the worry is that the entire family of such solutions may be trivial, since an infinite number of such models could be constructed. The missing ingredient is a method for attaching objective probabilities to social outcomes … global patterns, which in turn constrain the future behavior of individuals … Lewontin (2000) proposes an alternative, “niche construction” to emphasize the active role of organisms in constructing their own environments.

Strong MSM creates confusion by claiming that models can be used instead of experiments on the real world. When you look at a photograph of a person, are you looking at the person? Nevertheless, we suspect that you have photographs in your house. Obviously, there are many things to think about, such as the nature of a picture as a two-dimensional, wrong-sized, static, approximate visual representation, or even the possibility that the picture was falsified. Another way to say this is that models are partial truths: they partially reflect some aspects of reality. Good models have well-defined relationships to reality, so that we know how and when to use them. This means that we recognize which aspects of the model are related to which aspects of reality. This is not a piece-by-piece correspondence, but a behavior-by-behavior correspondence. How we can use a model is therefore a property not only of the model but also of our (incomplete) understanding of the relationship between the model and reality.

Agent-based models are indexical for real-world situations that are rule based in action, not in social construction. It is not enough to argue, as MSM does, that we should socially construct “the rules” merely because we have the ability to articulate “candidate rules.” Chris Langton offered a contrasting view of the utility of simulation models many years ago, when he said that agent-based simulations can show you a family of trajectories for how models might evolve. It is useful to see the envelope of those trajectories. There is a role for simulation in many areas of business operations such as improving manufacturing, transportation, or scheduling processes. And, like many a stock market model, simulation may be, in John Holland's (1998) terms, “a prosthesis for the imagination.” It may be possible, in looking at a model, to figure out a rule that applies both to the model and to the real world. Such a rule may be very hard to figure out from only studying the real world, yet it may be obvious in hindsight that the rule is correct. The risk lies in the proclamation that simulations can do what observations cannot—that is, to provide “a true science of human affairs.”
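Langton's suggestion of looking at the envelope of a family of trajectories can be sketched in a few lines. The model below is a hypothetical toy, not a model from Langton or the Santa Fe Institute: a simple adoption process in which each agent adopts either spontaneously or by imitating those who already have, with all parameter values invented. Running it under many random seeds and recording, for each time step, the lowest and highest adoption level observed yields an envelope: a band of possible trajectories rather than a single forecast.

```python
import random


def adoption_run(n_agents=200, steps=40, p_spont=0.01, p_imitate=0.3, seed=0):
    """Hypothetical toy agent-based model: each non-adopter adopts either
    spontaneously or by imitating the current share of adopters."""
    rng = random.Random(seed)
    adopted = [False] * n_agents
    trajectory = [0.0]
    for _ in range(steps):
        share = sum(adopted) / n_agents
        # Every agent reacts to the same start-of-step share (synchronous update);
        # agents who have already adopted stay adopted.
        adopted = [a or rng.random() < p_spont + p_imitate * share for a in adopted]
        trajectory.append(sum(adopted) / n_agents)
    return trajectory


def envelope(n_seeds=50, **model_kwargs):
    """Run the same model under many seeds; return per-step (low, high) bounds."""
    runs = [adoption_run(seed=s, **model_kwargs) for s in range(n_seeds)]
    return [(min(values), max(values)) for values in zip(*runs)]


if __name__ == "__main__":
    for t, (low, high) in enumerate(envelope()):
        if t % 5 == 0:
            print(f"step {t:2d}: adoption between {low:.2f} and {high:.2f}")
```

The output is a range per step, not a prediction; which path a real population would follow is exactly what the envelope leaves open.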

Economics has shown that “rational economic man” is a good teaching device on which to base theory or models, but a dangerous fallacy on which to rely in practice. As Raymond Miles, a leading management academic, has been heard to quip, “An economist is someone who has never seen a human being but has had one described to him.” Clearly, transportation system management, supply chain logistics, inventory control, and manufacturing processes lend themselves to rule-based computer simulation approaches. However, family relationship management does not, except, perhaps, at the scale of very large groups. Indexicals must be treated as the stand-ins that they are. Models will reflect the indexicals that they model.

Indexicality is not an often-discussed property of models, which is why it is stressed above. This is not meant to dismiss other issues, such as the roles played by assumptions, relevant parameters, sensitivity, complexity, or the environment surrounding the system being modeled. However, the assertions of the strong MSM proponents, their denial of the import of indexicality, and the potential risks of a backlash against the overstated MSM claims all demand that indexicality be surfaced as an issue.

In their forthcoming book Converging on Coherence (2003), Lissack and Letiche deal extensively with the role of indexicals and indexicality in organizations. Indexicality is a powerful phenomenon, but indexicals have only a limited carrying capacity. Their ability to absorb and reflect a multitude of contexts is constrained. Models and interpretations of models that fail to reflect such constraints can only lead to poor predictions.

USING MODELS WISELY

This article should not be construed as arguing against making appropriate use of models, whether formal models, computer-driven simulations, or automata. Models are helpful tools in exploration, as logical aids, and in thinking. For example, grocery retailer Sainsbury's has publicly noted with regard to its SimStore model:

We found that just leaving the software with a business analyst stimulated a great deal of interest. It seems to be an excellent aid for analysts who start out with a hunch and want to find out where it could lead.4 (Casti, 1999a, 1999b)

If we are to follow the physical sciences, then we are off on a path perhaps best described by Leduc's 1910 treatise “Physico-Chemical Theory of Life and Spontaneous Generation,” whereby the standard “course of development of every branch of natural science” begins with “observation and classification.” “The next step,” however, “is to decompose the more complex phenomena in order to determine the physical mechanism underlying them.” “Finally,” he writes, “when the mechanism of a phenomenon is understood, it becomes possible to reproduce it, to repeat it by directing the physical forces which are its cause” (Leduc, 1910: 113, cited in Fox-Keller, 2002). This is the goal of a dialog of models. It is unclear how mechanisms can be understood, and causes exploited, if all we look at are models. As Hogarth (2002: 141) writes:

Humans learn about the world from two sources: what others tell them and their own experiences. Moreover, there is strong interaction between these two sources. What other people say can direct what people experience, and what people experience can affect how they interpret what they have been told.

Bruner (2002: 27) adds:

Narrative, including fictional narrative, gives shape to things in the real world and often bestows on them a title to reality … we cling to narrative models of reality and use them to shape our everyday experiences.

In his work on principle-centered leadership, Stephen Covey (1991) identified four characteristics that are true of all organizations:

- They are holistic. There is a “big picture.”

- They are ecological. Everything is interdependent, and actions taken in one area are likely to have effects (intended or not) on others.

- They are organic. They develop over time, according to natural processes, based on how (and how well) they are nurtured.

- They are people based. Compelling results come from the actions of motivated people working together toward a shared purpose.

If modeling is to produce a “true science of human affairs,” then the model must truly reflect the modeled. Agents and simulations may perhaps reflect the first three of Covey's characteristics, but they are unlikely ever to reflect the fourth. A science of human affairs will come only from the study of humans, not of models, except insofar as the models, through their very partiality, focus researchers' attention on the tensions between concept and experience, idea and circumstance, and abstraction and “lived reality.”

Models have their purpose and their place. They are useful tools for thinking (Pidd, 1996) and even more useful pedagogues. Models can be used to develop an understanding of constraints (Bankes, 1994; Juarrero, 1999) and of how those constraints interplay in Lewontin's notion of “niche construction.” Let us not oversell models by claiming for them what they cannot do. Overselling is the first step toward a failed fad, and modeling is a serious business, not a fad. With his overstated and narcissistic claims, Wolfram has done neither his readers nor the wider scientific community a service by further popularizing a misguided notion of what computer models can do. As Helmreich (1999: 256) warns:

The use and abuse of computer simulations bears watching—especially in situations where there is a notable power differential between those putting together the simulation and those whose lives are the subjects and objects of these simulations.

Overselling the value of computer simulations and cellular automata is a direct threat to the funding of observation-based studies of human behavior. Such overselling, while pandering to decision makers' misguided requirements, leads to unsupportable claims of prediction about human affairs, and from such claims only failure can emerge. The price of the resulting backlash is one that the scientific community as a whole, and complex systems research in particular, cannot afford. We must continue to study real people, no matter how attractive the study of computer simulations or cellular automata may be. Science will regress if its funders feel otherwise. Neither computer simulations nor cellular automata are substitutes for people.

NOTES

- The interested reader may wish to consult Richardson (2002a), which begins from a position similar to Wolfram's but reaches very different conclusions about any science, new or not.

- We would not deny that computer simulation offers a new and exciting lens through which to contemplate organizational behavior, but such an approach will never lead to a “Theory of Social Systems.” If such a theory is one day developed, it will be multifaceted, combining methods that yield both what Wilbur (1996: 74) refers to as interior (subjective) and exterior (objective) knowledge. Computer simulation will have a place in such a patchwork of theories, but we need to be honest about the extent to which such approaches can provide practical understanding.

- Note that Richardson (2002) comes to a similar conclusion to Wolfram concerning the human condition, but points out that such determinism is quite opaque to mere mortals, which seriously undermines the value of Wolfram's observation. Consciousness may well be an illusion, but it is an illusion we will never observe as such—thankfully!

- Regarding models in this way is not a particularly new insight. However, given the prevailing, rather linearly inspired organizational cultures, models are still regarded in a representationalist sense. Organizational users of models demand accuracy despite the unreasonableness of the requirement. Given their role in a linear decision process, models are all too quickly assumed to be accurate depictions of reality. So, although the provisionality and contingency of all models are well known, popular culture persists in using them as if they were more than they are.

References

Bankes, S. (1993) “Exploratory modeling for policy analysis,” Operations Research, 41(3): 435-49.

Bankes, S. (1994) “Computational experiments in exploratory modeling,” Chance, 7(1): 50-51, 57.

Berger, P. & Luckmann, T. (1966) The Social Construction of Reality: A Treatise in the Sociology of Knowledge, New York: Anchor Books.

Bruner, J. (2002) Making Stories: Law, Literature, Life, New York: Farrar, Straus & Giroux.

Casti, J. (1999a) “Firm forecast,” New Scientist, 24 April: 42-6.

Casti, J. (1999b) “BizSim,” Complexity, 4(4).

Cilliers, P. (2000a) “Rules and complex systems,” Emergence, 2(3): 40-50.

Cilliers, P. (2000b) “What can we learn from a theory of complexity?,” Emergence, 2(1): 23-33.

Covey, S. (1991) Principle Centered Leadership, New York: Summit Books.

Epstein, J. & Axtell, R. (1996) Growing Artificial Societies: Social Science from the Bottom Up, Cambridge, MA: MIT Press.

Fox-Keller, E. (2002) Making Sense of Life: Explaining Biological Development with Models, Metaphors, and Machines, Cambridge, MA: Harvard University Press.

Goldspink, C. (2002) “Methodological implications of complex systems approaches to sociality,” Journal of Artificial Societies and Social Simulation, 5(1), http://jasss.soc.surrey.ac.uk/5/1/3.html.

Hacking, I. (1999) The Social Construction of What?, Cambridge, MA: Harvard University Press.

Helmreich, S. (1999) “Digitizing development: Balinese water temples, complexity, and the politics of simulation,” Critique of Anthropology, 19(3): 249-66.

Hogarth, R. (2002) Educating Intuition, Chicago: University of Chicago Press.

Holland, J. (1998) Emergence: From Chaos to Order, Cambridge, MA: Perseus Press.

Juarrero, A. (1999) Dynamics in Action: Intentional Behavior as a Complex System, Cambridge, MA: MIT Press.

Kagan, J. (2002) Surprise, Uncertainty, and Mental Structures, Cambridge, MA: Harvard University Press.

Kagel, J.H., Battalio, R.C., & Green, L. (1995) Economic Choice Theory: An Experimental Analysis of Animal Behavior, Cambridge, UK: Cambridge University Press.

Lansing, J.S. (2002) “Artificial societies and the social sciences,” Santa Fe Institute Working Paper 02-03-011, Santa Fe, NM.

Levy, S. (2002) “The man who cracked the code to everything,” Wired, 10(6).

Levitan, B., Lobo, J., Kauffman, S., & Schuler, R. (1999) “Optimal organization size in a stochastic environment with externalities,” Santa Fe Institute Working Paper 99-04-024, Santa Fe, NM.

Lewontin, R. (2000) It Ain't Necessarily So: The Dream of the Human Genome and Other Illusions, New York: New York Review Books.

Lissack, M. & Letiche, H. (2003) Converging on Coherence, Cambridge, MA: MIT Press, forthcoming.

Lobo, J. & Macready, W. (1999) “Landscapes: A natural extension of search theory,” Santa Fe Institute Working Paper 99-05-037, Santa Fe, NM.

Mikulecky, D.C. (n.d.) The Modeling Relation: How We Perceive, http://views.vcu.edu/~mikuleck/modrelisss.html.

Oreskes, N., Shrader-Frechette, K. & Belitz, K. (1994) “Verification, validation, and confirmation of numerical models in the earth sciences,” Science, 263: 641-6.

Pesic, P. (2002) Seeing Double, Cambridge, MA: MIT Press.

Pidd, M. (1996) Tools for Thinking: Modelling in Management Science, Chichester: Wiley.

Richardson, K.A. (2002a) “The hegemony of the physical sciences: An exploration in complexity thinking,” in P.M. Allen (ed.), Living with Limits to Knowledge, forthcoming, available at http://www.kurtrichardson.com/KAR_Hegemony.pdf.

Richardson, K.A. (2002b) “Methodological implications of complex systems approaches to sociality: Some further remarks,” Journal of Artificial Societies and Social Simulation, 5(2), http://jasss.soc.surrey.ac.uk/5/2/6.html.

Richardson, K.A. (2002c) “On the limits of bottom-up computer simulations: Towards a nonlinear marketing culture,” Proceedings of the 36th Hawai'i International Conference on System Sciences, forthcoming.

Richardson, K., Mathieson, G., & Cilliers, P. (2000) “The theory and practices of complexity science: Epistemological considerations for military operational research,” SysteMexico, 1(1): 25-66, available at http://www.kurtrichardson.com/milcomplexity.pdf.

Suppe, F. (1989) The Semantic Conception of Theories and Scientific Realism, Champaign, IL: University of Illinois Press.

van Fraassen, B. (1980) The Scientific Image, Oxford, UK: Oxford University Press.

Wilbur, K. (1996) A Brief History of Everything, Dublin: Newleaf.

Wilkins, J. (1996) “The evolutionary structure of scientific theories,” Biology and Philosophy, 13: 479-504.

Wolfram, S. (2002) A New Kind of Science, Champaign, IL: Wolfram Media.