Complexity Theory in Organization Science:

Seizing the Promise or Becoming a Fad?

Bill McKelvey

UCLA, USA

Over the past 35 years complexity theory has become a broad-ranging subject that is appreciated in a variety of ways, illustrated more or less in the books by Anderson, Arrow, and Pines (1988), Nicolis and Prigogine (1989), Mainzer (1994), Favre et al. (1995), Belew and Mitchell (1996), and Arthur, Durlauf, and Lane (1997). The study of complex adaptive systems (Cowan, Pines, and Meltzer, 1994) has become the ultimate interdisciplinary science, focusing its modeling activities on how microstate events, whether particles, molecules, genes, neurons, human agents, or firms, self-organize into emergent aggregate structures.

A fad is “a practice or interest followed for a time with exaggerated zeal” (Merriam Webster's, 1996). Management practice is especially susceptible to fads because of the pressure from managers for new approaches and the enthusiasm with which management consultants put untested organization science ideas into immediate practice. Complexity theory has already become the latest in a long string of management fads, such as T-groups, job enrichment, OD, autonomous work groups, quality circles, JIT inventories, and reengineering. A fad ultimately becomes discredited because its basic tenets remain uncorroborated by a progression of research investigations meeting accepted epistemological standards of justification logic. Micklethwait and Wooldridge (1996) refer to fad-pushing gurus as “witch doctors.”

The problem of questionable scientific standards in organization science is not limited to complexity theory applications (Pfeffer, 1993; McKelvey, 1997). Nevertheless, the application of complexity theory to firms offers another opportunity to consider various epistemological ramifications. The problem is exacerbated because complexity theory's already strong showing in the physical and life sciences could be emasculated as it is translated into an organizational context. Furthermore, the problem takes on a sense of urgency since:

- complexity theory appears on its face to be an important addition to organization science;

- it is already faddishly applied in a growing popular press and by consulting firms;1 and

- its essential roots in stochastic microstates have so far been largely ignored.

Thus, complexity theory shows all the characteristics of a short-lived fad.

Clearly, the mission of this and subsequent issues of Emergence is systematically to build up a base of high-quality scientific activity aimed at supporting complexity applications to management and organization science—thereby thwarting faddish tendencies. In this founding issue I suggest a bottom-up focus on organizational microstates and the adoption of the semantic conception of scientific theory. The union of these two hallmarks of current science and philosophy, along with computational modeling, may prevent complexity theory from becoming just another fad.

BOTTOM-UP ORGANIZATION SCIENCE

In their book Growing Artificial Societies, Epstein and Axtell (1996) join computational modeling with modern “bottom-up” science, that is, science based on microstates. A discussion of bottom-up organization science must define organizational microstates in addition to defining the nature of aggregate behavior. Particle models rest on microstates. For physicists, particles and microstates are one and the same—the microstates of physical matter are atomic particles and subparticles. For chemists and biologists, microstates are, respectively, molecules and biomolecules. For organization scientists, microstates are defined as discrete random behavioral process events.

So if they are not individuals, what are organizational microstates? Decision theorists would likely pick decisions. Information theorists might pick information bits. I side with process theorists. Information bits could well be the microstates for decision science and electronic bytes may make good microstates for information science, but they are below the organizational lower bound and are thus uninteresting to organization scientists. In the hierarchy of sciences—physics, chemistry, biology, psychology, organization science, economics—the lower bound separates a science from the one lower in the hierarchy. Since phenomena in or below the lower bound—termed microstates—are outside a particular science's explanatory interest, platform assumptions are made about whether they are “uniform” as in economists' assumptions about rational actors, or “stochastic” as in physicists' use of statistical mechanics on atomic particles or kinetic gas molecules. Sciences traditionally adopt the convenience of assuming uniformity early in their life-cycle and then later drift into the adoption of the more complicated stochastic assumption.

Process theorists define organizational processes as consisting of multiple events. Van de Ven (1992) notes that when a process as a black box or category is opened up it appears as a sequence of events. Abbott (1990) states: “every process theory argues for patterned sequences of events” (p. 375). Mackenzie (1986, p. 45) defines a process as “a time dependent sequence of elements governed by a rule called a process law,” and as having five components (1986, p. 46):

- The entities involved in performing the process;

- The elements used to describe the steps in a process;

- The relationships between every pair of these elements;

- The links to other processes; and

- The resource characteristics of the elements.

A process law “specifies the structure of the elements, the relationships between pairs of elements, and the links to other processes” and “a process is always linked to another, and a process is activated by an event” (Mackenzie, 1986, p. 46). In his view, an event “is a process that signals or sets off the transition from one process to another” (1986, pp. 46-7).

Mackenzie recognizes that in an organization there are multiple events, chains of events, parallel events, exogenous events, and chains of process laws. In fact, an event is itself a special process. Furthermore, there exist hierarchies of events and process laws. There are sequences of events and process laws. The situation is not unlike the problem of having a Chinese puzzle of Chinese puzzles, in which opening one leads to the opening of others (1986, p. 47). Later in his book, Mackenzie describes processes that may be mutually causally interdependent. In his view, even smallish firms could have thousands of process event sequences (1986, p. 46).

As process events, organizational microstates are obviously affected by adjacent events. But they are also affected by broader fields or environmental factors. While virtually all organization theorists study processes—after all, organizations have been defined for decades as consisting of structure and process (Parsons, 1960)—they tend to be somewhat vague about how and which process events are affected by external forces (Mackenzie, 1986). An exception is Porter's (1985) value chain approach, where what counts is determined directly by considering what activities are valuable for bringing revenue into the firm.

Those taking the “resource-based view” of strategy also develop the relationship between internal process capabilities and a firm's ability to generate rents, that is, revenues well in excess of marginal costs. These attempts to understand how resources internal to the firm act as sustainable sources of competitive advantage are reflected in such labels as the “resource-based view” (Wernerfelt, 1984), “core competence” (Prahalad and Hamel, 1990), “strategic flexibilities” (Sanchez, 1993), and “dynamic capabilities” (Teece, Pisano, and Shuen, 1994).

Those studying aggregate firm behavior increasingly have difficulty holding to the traditional uniformity assumption about human behavior. Psychologists have studied individual differences in firms for decades (Staw, 1991). Experimental economists have found repeatedly that individuals seldom act as uniform rational actors (Hogarth and Reder, 1987; Camerer, 1995). Phenomenologists, social constructionists, and interpretists have discovered that individual actors in firms have unique interpretations of the phenomenal world, unique attributions of causality to events surrounding them, and unique interpretations, social constructions, and sensemakings of others' behaviors that they observe (Silverman, 1971; Burrell and Morgan, 1979; Weick, 1995; Reed and Hughes, 1992; Chia, 1996). Resource-based view strategists refer to tacit knowledge, idiosyncratic resources, and capabilities, and Porter (1985) refers to unique activities. Although the fieldlike effects of institutional contexts on organizational members are acknowledged (Zucker, 1988; Scott, 1995), and the effects of social pressure and information have a tendency to move members toward more uniform norms, values, and perceptions (Homans, 1950), strong forces remain to steer people toward idiosyncratic behavior in organizations and the idiosyncratic conduct of organizational processes:

- Geographical locations and ecological contexts of firms are unique.

- CEOs and dominant coalitions in firms are unique—different people in different contexts.

- Individuals come to firms with unique family, educational, and experience histories.

- Emergent cultures of firms are unique.

- Firms seldom have totally overlapping suppliers and customers, creating another source of unique influence on member behavior.

- Individual experiences within firms, over time, are unique, since each member is located uniquely in the firm, has different responsibilities, has different skills, and is surrounded by different people, all forming a unique interaction network.

- Specific firm process responsibilities—as carried out—are unique due to the unique supervisor-subordinate relationship, the unique interpretation an individual brings to the job, and the fact that each process event involves different materials and different involvement by other individuals.

Surely the essence of complexity theory is Prigogine's (Prigogine and Stengers, 1984; Nicolis and Prigogine, 1989) discovery that:

- “At the edge of chaos” identifiable levels of imported energy, what Schrödinger (1944) terms “negentropy,” cause aggregate “dissipative structures” to emerge from the stochastic “soup” of microstate behaviors.

- Dissipative structures, while they exist, show predictable behaviors amenable to Newtonian kinds of scientific explanation.

- Scientific explanations (and I would add epistemology) most correctly applicable to the region of phenomena at the edge of chaos resolve a kind of complexity that differs in essential features from the kinds of complexity that Newtonian science, deterministic chaos, and statistical mechanics attempt to resolve (Cramer, 1993; Cohen and Stewart, 1994).

None of these contributions by Prigogine may sensibly be applied to organization science without a recognition of organizational microstates.

THE IMPORTANCE OF THE SEMANTIC CONCEPTION

Philosophers differentiate entities and theoretical terms into those that:

- are directly knowable via human senses;

- may be eventually detectable via further development of measures; or

- are metaphysical in that no measure will allow direct knowledge of their existence.

Positivists tried to solve the fundamental dilemma of science: how to conduct truth-tests of theories, given that many of their constituent terms are unobservable and unmeasurable, seemingly unreal terms, and thus beyond the direct first-hand sensory knowledge of investigators. This dilemma clearly applies to organization science in that many organizational terms, such as legitimacy, control, bureaucracy, motivation, inertia, culture, effectiveness, environment, competition, complex, carrying capacity, learning, adaptation, and the like, are metaphysical concepts. Despite years of attempts to fix the logical structure of positivism, its demise was sealed at the 1969 Illinois symposium and its epitaph written by Suppe (1977), who gives a detailed analysis of its logical shortcomings.

It is clear that positivism is now obsolete among modern philosophers of science (Rescher, 1970, 1987; Devitt, 1984; Nola, 1988; Suppe, 1989; Hunt, 1991; Aronson, Harré, and Way, 1994; de Regt, 1994). Nevertheless, the shibboleth of positivism lingers in economics (Blaug, 1980; Redman, 1991; Hausman, 1992), organization science (Pfeffer, 1982, 1993; Bacharach, 1989; Sutton and Staw, 1995; Donaldson, 1996; Burrell, 1996), and strategy (Camerer, 1985; Montgomery, Wernerfelt, and Balakrishnan, 1989). It is still being used to separate “good normal science” from other presumably inferior approaches.

Although the untenable elements of positivism have been abandoned, many aspects of its justification logic remain and have been carried over into scientific realism. Positivism's legacy emphasizes the necessity of laws for explaining underlying structures or processes and creating experimental findings—both of which protect against attempting to explain naturally occurring accidental regularities. They also define a sound scientific procedure for developing “instrumentally reliable” results. Instrumental reliability is defined as occurring when a counterfactual conditional, such as “if A then B,” is reliably forthcoming over a series of investigations. While positivists consider this the essence of science, that is, the instrumental goal of producing highly predictable results, scientific realists accept instrumentally reliable findings as the beginning of their attempt to produce less fallible scientific statements. Elements of the legacy are presented in more detail in McKelvey (1999c).

Three important normal science postpositivist epistemologies are worth noting. First, scientific realists argue for a fallibilist definition of scientific truth—what Popper (1982) terms “verisimilitude” (truthlikeness). Explanations having higher fallibility could be due to the inclusion of metaphysical rather than observable terms, or they could be due to poor operational measures, sampling error, or theoretical misconception, and so forth. Given this, progress toward increased verisimilitude is independent of whether theory terms are observable or metaphysical, because there are multiple causes of high fallibility. This idea is developed more fully by Aronson, Harré, and Way (1994). Second, the semantic conception of theories (Beth, 1961; Suppe, 1977, 1989) holds that scientific theories relate to models of idealized systems, not the complexity of real-world phenomena and not necessarily to self-evidently true root axioms. And third, evolutionary epistemology (Campbell, 1974, 1988, 1995; Hahlweg and Hooker, 1989) emphasizes a selectionist process that winnows out the more fallible theories. Collectively, these epistemologies turn the search for truth on its head—instead of expecting to zero in on an exactly truthful explanation, science focuses on selectively eliminating the least truthful explanations. Elsewhere I elaborate the first and third of these contributions under the label Campbellian Realism (McKelvey, 1999c).

The semantic conception's model-centered view of science offers a useful bridge between scientific realism and the use of computational experiments as a basis of truth-tests of complexity theory-rooted explanations in organization science. Scientific realism builds on a number of points. First, Bhaskar (1975/1997) sets up the model development process in terms of experimentally created tests of counterfactuals, such as “force A causes outcome B,” that protect against accidental regularities. Second, Van Fraassen (1980), drawing on the semantic conception, develops a model-centered epistemology and sets up experimental (empirical2) adequacy as the only reasonable and relevant “well-constructed science” criterion. Third, accepting the model-centered view and experimental adequacy, Aronson, Harré, and Way (1994) then add ontological adequacy so as to create a scientific realist epistemology. In their view, models are judged as having a higher probability of truthlikeness if they are:

- experimentally adequate in terms of a theory leading to experimental predictions testing out; and

- ontologically adequate in terms of the model's structures accurately representing that portion of reality deemed to be within the scope of the theory at hand.

Finally, de Regt (1994) develops a “strong argument” for scientific realism building on the probability paradigm, recognizing that instrumentally reliable theories leading to highly probable knowledge result from a succession of eliminative inductions.

After Beth (1961), three early contributors to the semantic conception emerged: Suppes (1961, 1962, 1967), Suppe (1967, 1977, 1989), and van Fraassen (1970, 1980). Subsequent interest by Beatty (1981, 1987), Lloyd (1988), and Thompson (1989) in biology is relevant because biological theories, like organization theories, pertain to entities existing in a competitive ecological context. The structure of competition-relevant theories in both disciplines suffers the same epistemological faults (Peters, 1991). Suppes chooses to formalize theories in terms of set-theoretic structure, on the grounds that, as a formalization, set theory is more fundamental to formalization than are axioms.

Instead of a set-theoretic approach, van Fraassen chooses a state space and Suppe a phase space platform. A phase space is defined as a space enveloping the full range of each dimension used to describe an entity. Thus, one might have a regression model in which variables such as size (employees), gross sales, capitalization, production capacity, age, and performance define each firm in an industry, and each variable might range from near zero to whatever number defines the upper limit on each dimension. These dimensions form the axes of a Cartesian space. In the phase space approach, the task of a formalized theory is to represent the full dynamics of the variables defining the space, as opposed to the axiomatic approach where the theory builds from a set of assumed axioms. A phase space may be defined with or without identifying underlying axioms. The set of formalized statements of the theory is not defined by how well they interpret the set of axioms, but rather by how well they define phase spaces across various phase transitions.
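
The phase space idea can be made concrete with a minimal sketch. It assumes a reduced set of three of the dimensions named above and a purely hypothetical transition rule (`toy_rule`) standing in for "the theory"; the function names and all numbers are invented for illustration and do not come from Suppe or van Fraassen. Each firm state is a point in the Cartesian space, and the theory's job is to say how that point moves.

```python
from typing import Callable, Dict, List, Tuple

# A subset of the variables named in the text; each one is an axis of the space.
DIMENSIONS = ("employees", "gross_sales", "age")

State = Tuple[float, ...]  # one point in the phase space: one value per axis


def trajectory(start: State, rule: Callable[[State], State], steps: int) -> List[State]:
    """Apply a transition rule repeatedly and return every state visited."""
    states = [start]
    for _ in range(steps):
        states.append(rule(states[-1]))
    return states


def bounds(states: List[State]) -> Dict[str, Tuple[float, float]]:
    """Range covered on each axis by a set of states."""
    return {
        name: (min(s[i] for s in states), max(s[i] for s in states))
        for i, name in enumerate(DIMENSIONS)
    }


def toy_rule(state: State) -> State:
    """Hypothetical dynamics: headcount +10%, sales +20%, age +1 year per step."""
    employees, sales, age = state
    return (employees * 1.1, sales * 1.2, age + 1.0)


path = trajectory((100.0, 5.0, 3.0), toy_rule, steps=4)
print(bounds(path))
```

In this framing, judging the theory means asking how well `toy_rule` describes movement through the space, not whether it was derived from a set of axioms.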

Having defined theoretical adequacy in terms of how well a theory describes a phase space, the question arises of what are the relevant dimensions of the space. In the axiomatic conception, axioms are used to define the adequacy of the theory. In the semantic conception, adequacy is defined by the phenomena. The current reading of the history of science by the semantic conception philosophers shows two things:

- Many modern sciences do not have theories that inexorably derive from root axioms.

- No theory ever attempts to represent or explain the full complexity of some phenomenon.

Classic examples given are the use of point masses, ideal gases, pure elements and vacuums, frictionless slopes, and assumed uniform behavior of atoms, molecules, and genes. Scientific laboratory experiments are always carried out in the context of closed systems whereby many of the complexities of natural phenomena are set aside. Suppe (1977, pp. 223-4) defines these as “isolated idealized physical systems.” Thus, an experiment might manipulate one variable, control some variables, assume many others are randomized, and ignore the rest. In this sense the experiment is isolated from the complexity of the real world and the physical system represented by the experiment is necessarily idealized.

A theory is intended to provide a generalized description of a phenomenon, say, a firm's behavior. But no theory ever includes so many terms and statements that it could effectively accomplish this. A theory:

- “does not attempt to describe all aspects of the phenomena in its intended scope; rather it abstracts certain parameters from the phenomena and attempts to describe the phenomena in terms of just these abstracted parameters” (Suppe, 1977, p. 223);

- assumes that the phenomena behave according to the selected parameters included in the theory; and

- is typically specified in terms of its several parameters with the full knowledge that no empirical study or experiment could successfully and completely control all the complexities that might affect the designated parameters—theories are not specified in terms of what might be experimentally successful.

In this sense, a theory does not give an accurate characterization of the target phenomena—it predicts the progression of the modeled phase space over time, which is to say that it predicts a shift from one abstract replica to another under the assumed idealized conditions. Idealization could be in terms of the limited number of dimensions, assumed absence of effects of the many forces not included, mathematical formalization syntax, or the assumed bearing of various auxiliary hypotheses relating to theories of experiment, theories of data, and theories of numerical measurement. “If the theory is adequate it will provide an accurate characterization of what the phenomenon would have been had it been an isolated system” (Suppe, 1977, p. 224; my italics).

MODEL-CENTERED SCIENCE

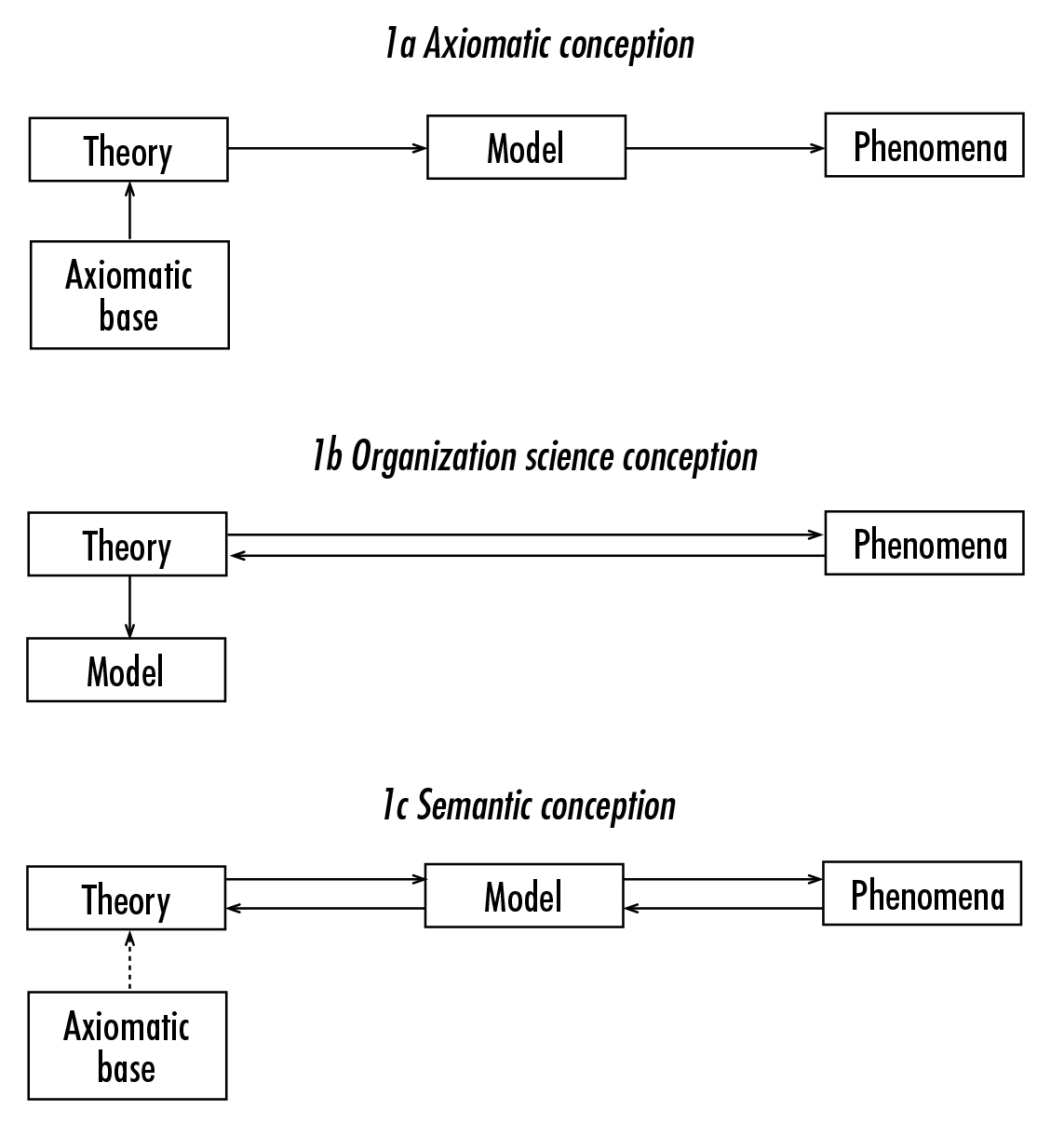

The central feature of the semantic conception is the pivotal role given to models. Figure 1 shows three views of the relation among theory, models, and phenomena. Figure 1a stylizes a typical axiomatic conception:

- A theory is developed from its axiomatic base.

- Semantic interpretation is added to make it meaningful in, say, physics, thermodynamics, or economics.

- The theory is used to make and test predictions about the phenomena.

- The theory is defined as experimentally and ontologically adequate if it both reduces to the axioms and is instrumentally reliable in predicting empirical results.

Figure 1b stylizes a typical organization science approach:

- A theory is induced after an investigator has gained an appreciation of some aspect of organizational behavior.

- An iconic model is often added to give a pictorial view of the interrelation of the variables or to show hypothesized path coefficients, or possibly a regression model is formulated.

- The model develops in parallel with the theory as the latter is tested for both experimental and ontological adequacy by seeing whether effects predicted by the theory can be discovered in some sampling of the phenomena.

Figure 1 Conceptions of the axiom–theory–model–phenomena relationship

Figure 1c stylizes the semantic conception:

- The theory, model, and phenomena are viewed as independent entities.

- Science is bifurcated into two independent but not unrelated truth-testing activities:

  - experimental adequacy is tested by seeing whether the theory, stated as counterfactual conditionals, predicts the empirical behavior of the model (think of the model as an isolated idealized physical system moved into a laboratory or onto a computer);

  - ontological adequacy is tested by comparing the isomorphism of the model's idealized structures/processes against that portion of the total relevant “real-world” phenomena defined as “within the scope of the theory.”

It is important to emphasize that in the semantic conception, “theory” is always hooked to and tested via the model. “Theory” does not attempt to explain “real-world” behavior; it only attempts to explain “model” behavior. It does its testing in the isolated, idealized physical world structured into the model. “Theory” is not considered a failure because it does not become elaborated and fully tested against all the complex effects characterizing the real-world phenomenon. The mathematical or computational model is used to structure up aspects of interest within the full complexity of the real-world phenomenon and defined as “within the scope” of the theory. Then the model is used to test the “if A then B” counterfactuals of the theory to consider how a firm—as modeled—might behave under various possibly occurring conditions. Thus a model would not attempt to portray all aspects of, say, notebook computer firms—only those within the scope of the theory being developed. And, if the theory did not predict all aspects of these firms' behaviors under the various relevant real-world conditions, it would not be considered a failure. However, this is only half the story.

Parallel to developing the experimental adequacy of the “theory-model” relationship is the activity of developing the ontological adequacy of the “model-phenomena” relationship. How well does the model represent or refer to the “real-world” phenomena? For example, how well does an idealized wind-tunnel model of an airplane wing represent the behavior of a full-sized wing on a plane flying in a storm? How well does a drug shown to work on “idealized” lab rats work on people of different ages, weights, and physiologies? How well might a computational model, such as the Kauffman (1993) NK model that Levinthal (1997a, b), Rivkin (1997, 1998), Baum (1999), and McKelvey (1999a, b) use, represent coevolutionary competition, that is, actually represent that kind of competition in, say, the notebook computer industry?
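
For readers unfamiliar with the NK model, the following is a minimal sketch of a generic NK fitness landscape with a one-bit-flip adaptive walk. It is not the implementation used by Kauffman or by any of the authors cited above. The function names, the seed, the choice of N = 8 and K = 2, and the decision to pick each site's K interaction partners at random are all assumptions made for illustration. Each of N binary sites contributes a fitness value that depends on its own state and on the states of K other sites, and overall fitness is the mean of those contributions.

```python
import itertools
import random
from typing import Callable, Tuple

Config = Tuple[int, ...]  # one binary string of length N


def make_nk_landscape(n: int, k: int, seed: int = 0) -> Callable[[Config], float]:
    """Build a random NK fitness function (requires 0 <= k <= n - 1)."""
    rng = random.Random(seed)
    # Assumption: each site interacts with K other sites chosen at random.
    neighbors = [rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    # One lookup table per site: a uniform random draw for every combination
    # of the site's own bit and its K neighbors' bits.
    tables = [
        {bits: rng.random() for bits in itertools.product((0, 1), repeat=k + 1)}
        for _ in range(n)
    ]

    def fitness(config: Config) -> float:
        total = 0.0
        for i in range(n):
            key = (config[i],) + tuple(config[j] for j in neighbors[i])
            total += tables[i][key]
        return total / n

    return fitness


def adaptive_walk(fitness: Callable[[Config], float], start: Config) -> Config:
    """Hill-climb by single-bit flips until no flip improves fitness."""
    current = tuple(start)
    while True:
        candidates = [
            current[:i] + (1 - current[i],) + current[i + 1:]
            for i in range(len(current))
        ]
        best = max(candidates, key=fitness)
        if fitness(best) <= fitness(current):
            return current  # local optimum reached
        current = best


fitness = make_nk_landscape(n=8, k=2, seed=42)
peak = adaptive_walk(fitness, (0,) * 8)
print(peak, round(fitness(peak), 3))
```

The ontological-adequacy question raised above is whether anything in a real industry corresponds to the sites, the interaction structure, and the local search rule that such a model assumes.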

A primary difficulty encountered with the axiomatic conception is the presumption that only one fully adequate model derives from the underlying axioms—only one model can “truly” represent reality in a rigorously developed science. For some philosophers, therefore, a discipline such as evolutionary biology fails as a science. Instead of a single axiomatically rooted theory, as proposed by Williams (1970) and defended by Rosenberg (1985), evolutionary theory is more realistically seen as a family of theories, including theories explaining the mechanisms of natural selection, mechanisms of heredity, mechanisms of variation, and a taxonomic theory of species definition (Thompson, 1989, Ch. 1).

Since the semantic conception does not require axiomatic reduction, it tolerates multiple models. Thus, “truth” is not defined in terms of reduction to a single model. Mathematical, set-theoretical, and computational models are considered equal contenders to represent real-world phenomena. In physics, both wave and particle models are accepted because they both produce instrumentally reliable predictions. That they also have different theoretical explanations is not considered a failure. Each is an isolated, idealized physical system representing different aspects of real-world phenomena. In evolutionary theory there is no single “theory” of evolution. There are in fact subordinate families of theories (multiple models) within the main families about natural selection, heredity, variation, and taxonomic grouping. Organization science also consists of various families of theories, each having families of competing theories within it—families of theories about process theory, population ecology, organizational culture, structural design, corporate performance, sustained competitive advantage, organizational change, and so on. Axiomatic reduction does not appear to be in sight for any of these theories. The semantic conception defines a model-centered science comprised of families of models without a single axiomatic root.

MODEL-CENTERED ORGANIZATION SCIENCE

If the semantic conception of science is defined as preferring formalized families of models, theory-model experimental tests, and the model-phenomena ontological tests, organization science generally misses the mark, although population ecology studies measure up fairly well (Hannan and Freeman, 1989; Hannan and Carroll, 1992). Truth-tests are typically defined in terms of a direct “theory-phenomena” corroboration, with the results that:

- Organization science does not have the bifurcated theory-model and model-phenomena tests.

- The strong counterfactual type of theory confirmation is seldom achieved because organization science attempts to predict real-world behavior rather than model behavior.

- Formal models are considered invalid because their inherent idealizations invariably fail to represent real-world complexity; that is, instrumental reliability is low.

Semantic conception philosophers do take pains to insist that their epistemology does not represent a shift away from the desirability of moving toward formalized (while not necessarily axiomatic) models. However, Suppe (1977, p. 228), for example, chooses the phase space foundation rather than set theory because it does not rule out qualitative models. In organization science there is a wide variety of formalized models (Carley, 1995), but in fact most organization and strategy theories are not formalized, as a reading of such basic sources as Clegg, Hardy, and Nord (1996), Donaldson (1996), Pfeffer (1997), and Scott (1998) readily demonstrates. In addition, these theories have low ontological adequacy, and if the testing of counterfactual conditionals is any indication, little experimental adequacy either.

Witchcraft, shamanism, astrology, and the like are notorious for attaching post hoc explanations to apparent regularities that are frequently accidental—“disaster struck in 1937 after the planets were lined up thus.” Nomic necessity is one kind of protection against attempting to explain a possibly accidental regularity: rational logic must be able to point to a lawful relation between the regularity and an underlying structure or force that, if present, would produce it, as in “If force A then regularity B.” Nomic necessity is a necessary condition. However, using an experimentally created result to test the “if A then B” counterfactual posed by the law in question is critically important. Experiments more than anything else separate science from witchcraft or antiscience. Without a program of experimental testing, complexity applications to organization science remain metaphorical and, if made the basis of consulting agendas and other managerially oriented advice, are difficult to distinguish from witchcraft.

An exemplar scientific program is Kauffman's 25 years or so of work on his “complexity may thwart selection” hypothesis, summarized in his 1993 book. He presents numerous computational experiments and the model structures and results of these are systematically compared with the results of vast numbers of other experiments carried out by biologists over the years. Should we accept complexity applications to management as valid without a similar course of experiments having taken place?

Agreed, the “origin of life” question is timeless, so Kauffman's research stream is short by comparison. No doubt some aspects of management change radically over only a few years. On the other hand, many organizational problems, such as centralization-decentralization, specialization-generalization, environmental fit, learning, and change, seem timeless, are revisited over and over, and could easily warrant longer research programs. Computational models have already been applied to these and other issues, as Carley's (1995) review indicates. But the surface has hardly been scratched—the coevolution of the theory-model and model-phenomena links has barely begun the search for the optimum match of organization theory, model, and real world.

AGENT-BASED MODELS

It is clear from the literature described in Nicolis and Prigogine (1989), Kaye (1993), Kauffman (1993), Mainzer (1994), and Favre et al. (1995) that natural science-based complexity theory fits the semantic conception's rewriting of how effective science works. There is now a considerable natural science literature of formalized mathematical and computational theory on the one hand, and many tests of the adequacy of the theoretical models to real-world phenomena on the other. A study of the literature emanating from the Santa Fe Institute (Kauffman, 1993; Cowan, Pines, and Meltzer, 1994; Gumerman and Gell-Mann, 1994; Belew and Mitchell, 1996; Arthur, Durlauf, and Lane, 1997) shows that although social science applications lag in their formalized model-centeredness, the trend is in this direction.

Despite Carley's citing of over 100 papers using models in her 1995 review paper, mainstream organization science cannot be characterized as a model-centered science. There seems to be a widespread phobia against linear differential equations, the study of rates, and mathematical formalization in general. A more suitable and more accessible alternative may be adaptive learning agent-based models. The fact that these models are not closed-form solutions is an imperfection, but organization scientists recognize that equations may be equally imperfect due to the heroic assumptions required to make them mathematically tractable. Is one kind of imperfection better or worse than any other? Most of the studies cited by Carley do not use agent-based models.

I began this article with a section on process-level theory, with special attention paid to organizational microstates, in order to set up my call here for more emphasis on agent-based models. This follows leads taken by Cohen, March, and Olsen (1972), March (1991), Carley (1992, 1997), Carley and Newell (1994), Carley and Prietula (1994), Carley and Svoboda (1996), Carley and Lee (1997), Masuch and Warglien (1992), and Warglien and Masuch (1996), among others. Adaptive learning models assume that agents have stochastic nonlinear behaviors and that these agents change over time via stochastic nonlinear adaptive improvements. Assumptions about agent attributes or capabilities may be as simple as one rule—“Copy another agent or don't.” Computational modelers assume that the aggregate adaptive learning “intelligence,” capability, or behavior of an organization may be effectively represented by millions of “nanoagents” in a combinatorial search space—much like intelligence in our brains appears as a network of millions of neurons, each following a simple firing rule. Needless to say, complexity science researchers recognize that computational models not only fit the basic stochastic nonlinear microstate assumption, but also simulate various kinds of aggregate physical and social system behaviors.
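The one-rule agent described above can be made concrete with a small simulation. What follows is only a minimal sketch under stated assumptions, not a reconstruction of any of the cited models: the bit-string attributes, the fitness measure (a count of 1s), the random pairing of agents, and the names `simulate_imitation` and `copy_prob` are all hypothetical choices made for illustration.

```python
import random


def simulate_imitation(n_agents=50, n_bits=8, steps=2000, copy_prob=0.5, seed=0):
    """Run a toy imitation model; return total population fitness after each step.

    Assumptions (illustrative only): each agent is a list of bits, and an
    agent's fitness is simply the number of 1s it holds.
    """
    rng = random.Random(seed)
    agents = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(n_agents)]

    def fitness(agent):
        return sum(agent)

    history = [sum(fitness(a) for a in agents)]
    for _ in range(steps):
        # Pick two distinct agents at random: a focal agent i and a model j.
        i, j = rng.sample(range(n_agents), 2)
        # The single rule: "copy another agent or don't". Agent i copies j
        # only if j is fitter, and even then only with probability copy_prob.
        if fitness(agents[j]) > fitness(agents[i]) and rng.random() < copy_prob:
            agents[i] = list(agents[j])
        history.append(sum(fitness(a) for a in agents))
    return history


if __name__ == "__main__":
    trace = simulate_imitation()
    print("total fitness at start:", trace[0])
    print("total fitness at end:  ", trace[-1])
```

Because an agent only ever copies a fitter agent, total fitness in this sketch can never fall. The aggregate improvement comes entirely from repeated local imitation, with no agent holding a model of the whole population.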

Although relatively unknown in organization science, “interactive particle systems,” “particle,” “nearest neighbor,” or, more generally, adaptive-learning agent-based models are well known in the natural sciences. Whatever the class of model, very simpleminded agents adopt a neighboring agent's attributes to reduce energy or gain fitness. The principal modeling classes are spin glass (Mézard, Parisi, and Virasoro, 1987; Fischer and Hertz, 1993), simulated annealing (Aarts and Korst, 1989), cellular automata (Toffoli and Margolus, 1987; Weisbuch, 1993), neural network (Wasserman, 1989, 1993; Müller and Reinhardt, 1990; Freeman and Skapura, 1993), genetic algorithm (Holland, 1975, 1995; Goldberg, 1989; Mitchell, 1996), and, most recently, population games (Blume, 1997). For a broader review see Garzon (1995). Most model applications stay within one class, though Carley's (1997) “ORGAHEAD” model uses models from several classes in a hierarchical arrangement. More specifically within the realm of complexity applications to firms, Kauffman's (1993) NK model and rugged landscape have attracted attention (Levinthal, 1997a, b; Levinthal and Warglien, 1997; Rivkin, 1997, 1998; Sorenson, 1997; Baum, 1999; and McKelvey, 1999a, b). These are all theory-model experimental adequacy studies, although Sorenson's study is a model-phenomena ontological test.
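For readers unfamiliar with the NK model, the basic idea can be sketched in a few lines. This is a simplified illustration, not Kauffman's own code or any of the cited implementations. It assumes N binary sites, each drawing its fitness contribution from a random table indexed by its own state and the states of K other randomly chosen sites, with overall fitness taken as the mean contribution. The function names and the one-flip adaptive walk are hypothetical choices.

```python
import random


def make_nk_landscape(n, k, seed=0):
    """Build a random NK fitness function over lists of n bits (requires k <= n - 1)."""
    rng = random.Random(seed)
    # Each site i depends on itself plus k other randomly chosen sites.
    neighbours = [rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    # One random contribution per site for every configuration of its k + 1 inputs.
    tables = [[rng.random() for _ in range(2 ** (k + 1))] for _ in range(n)]

    def fitness(state):
        total = 0.0
        for i in range(n):
            index = state[i]
            for j in neighbours[i]:
                index = (index << 1) | state[j]
            total += tables[i][index]
        return total / n

    return fitness


def adaptive_walk(fitness, n, seed=0):
    """Flip one bit at a time, keeping only improvements, until none remains."""
    rng = random.Random(seed)
    state = [rng.randint(0, 1) for _ in range(n)]
    current = fitness(state)
    improved = True
    while improved:
        improved = False
        for i in rng.sample(range(n), n):  # try sites in random order
            state[i] ^= 1
            candidate = fitness(state)
            if candidate > current:
                current = candidate
                improved = True
                break
            state[i] ^= 1  # undo a flip that did not help
    return state, current


if __name__ == "__main__":
    for k in (0, 2, 6):
        landscape = make_nk_landscape(n=10, k=k, seed=1)
        _, peak = adaptive_walk(landscape, n=10, seed=2)
        print("K =", k, "local peak fitness =", round(peak, 3))
```

With K = 0 the sites are independent and the landscape has a single peak, so the walk in this sketch always ends at the global optimum. As K rises, the landscape becomes rugged and walks can stall on different local peaks, which is the property the firm-level applications cited above draw on.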

CONCLUSION

If we are to have an effective complexity science applied to firms, we should first see a systematic agenda linking theory development with mathematical or computational model development. The counterfactual tests are carried out via the theory-model link. We should also see a systematic agenda linking model structures with real-world structures. The tests of the model-phenomena link focus on how well the model refers, that is, represents real-world behavior. Without evidence that both of these agendas are being actively pursued, there is no reason to believe that we have a complexity science of firms.

A cautionary note: Even if the semantic conception program is adopted, organization science still seems likely to suffer in instrumental reliability compared to the natural sciences. The “isolated, idealized physical systems” of natural science are more easily isolated and idealized, and with lower cost to reliability, than are socioeconomic systems. Natural science lab experiments more reliably test nomic-based counterfactual conditionals and have much higher ontological representative accuracy. In other words, the “closed systems” of natural science are less different from its “open systems” than is the case for socioeconomic systems, which accounts for the higher instrumental reliability of the natural sciences.

The good news is that the semantic conception makes improving instrumental reliability easier to achieve. The benefit stems from the bifurcation of scientific activity into independent tests for experimental adequacy and ontological adequacy. First, by having one set of scientific activities focus only on the predictive aspects of a theory-model link, the chances improve of finding models that test counterfactuals with higher experimental instrumental reliability—the reliability of predictions increases. Second, by having the other set of scientific activities focus only on the model structures across the model-phenomena link, ontological instrumental reliability will also improve. For these activities, reliability hinges on the isomorphism of the structures causing both model and real-world behavior, not on whether the broader predictions across the full range of the complex phenomena occur with high probability. Thus, in the semantic conception instrumental reliability now rests on the joint probability of two elements:

- predictive experimental reliability; and

- model structure reliability.
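The joint probability just described can be written out as a worked equation. The symbols are introduced here purely for illustration and are not part of the original argument: E stands for the event that the theory-model predictions hold (predictive experimental reliability), and S for the event that the model structures match the real-world structures (model structure reliability). The product form assumes the two tests are statistically independent, and the numbers are invented for the arithmetic only.

```latex
% Instrumental reliability as the joint probability of the two elements
R_{\text{instrumental}} = P(E \cap S) = P(E)\, P(S \mid E)

% If the two tests are independent, this reduces to a simple product
R_{\text{instrumental}} = P(E)\, P(S)

% Illustrative values only
P(E) = 0.9, \quad P(S) = 0.8
\quad \Longrightarrow \quad
R_{\text{instrumental}} = 0.9 \times 0.8 = 0.72
```

On this reading, neither element can compensate fully for the other: overall reliability can never exceed the weaker of the two probabilities.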

If a science is not centered around (preferably) formalized computational or mathematical models, it has little chance of being effective or adequate. Such is the message of late twentieth-century (postpositivist) philosophy of science. This message tells us very clearly that in order for an organizational complexity science to avoid faddism and scientific discredit, it must become model-centered.

NOTES

- A review of some 30 “complexity theory and management” books, coedited by Stu Kauffman, Steve Maguire, and Bill McKelvey, will appear in a special review issue of Emergence (1999, #2).

- I substitute “experimental” for the term “empirical” that van Fraassen uses so as to distinguish more clearly between the testing of counterfactuals (which could be via formal models and laboratory or computer experiments) and empirical (real-world) reference or representativeness—both of which figure in van Fraassen's use of “empirical.”

REFERENCES

Aarts, E. and Korst, J. (1989) Simulated Annealing and Boltzmann Machines, New York: Wiley.

Abbott, A. (1990) “A Primer on Sequence Methods,” Organization Science, 1, 373-93.

Anderson, P.W., Arrow, K.J. and Pines, D. (eds) (1988) The Economy as an Evolving Complex System, Proceedings of the Santa Fe Institute Vol. V, Reading, MA: Addison-Wesley.

Aronson, J.L., Harre, R. and Way, E.C. (1994) Realism Rescued, London: Duckworth.

Arthur, W.B., Durlauf, S.N. and Lane, D.A. (eds) (1997) The Economy as an Evolving Complex System, Proceedings Vol. XXVII, Reading, MA: Addison-Wesley.

Bacharach, S.B. (1989) “Organization Theories: Some Criteria for Evaluation,” Academy of Management Review, 14, 496-515.

Baum, J.A.C. (1999) “Whole-Part Coevolutionary Competition in Organizations,” in J.A.C. Baum and B. McKelvey (eds), Variations in Organization Science: in Honor of Donald T. Campbell, Thousand Oaks, CA: Sage.

Beatty, J. (1981) “What's Wrong with the Received View of Evolutionary Theory?” in P.D. Asquith and R.N. Giere (eds), PSA 1980, Vol. 2, East Lansing, MI: Philosophy of Science Association, 397-426.

Beatty, J. (1987) “On Behalf of the Semantic View,” Biology and Philosophy, 2, 17-23.

Belew, R.K. and Mitchell, M. (eds) (1996) Adaptive Individuals in Evolving Populations, Proceedings Vol. XXVI, Reading, MA: Addison-Wesley.

Beth, E. (1961) “Semantics of Physical Theories,” in H. Freudenthal (ed.), The Concept and the Role of the Model in Mathematics and Natural and Social Sciences, Dordrecht: Reidel, 48-51.

Bhaskar, R. (1975) A Realist Theory of Science, London: Leeds Books [2nd edn published by Verso (London) 1997].

Blaug, M. (1980) The Methodology of Economics, New York: Cambridge University Press.

Blume, L.E. (1997) “Population Games,” in W.B. Arthur, S.N. Durlauf and D.A. Lane (eds), The Economy as an Evolving Complex System, Proceedings of the Santa Fe Institute, Vol. XXVII, Reading, MA: Addison-Wesley, 425-60.

Burrell, G. (1996) “Normal Science, Paradigms, Metaphors, Discourses and Genealogies of Analysis,” in S.R. Clegg, C. Hardy and W.R. Nord (eds), Handbook of Organization Studies, Thousand Oaks, CA: Sage, 642-58.

Burrell, G. and Morgan, G. (1979) Sociological Paradigms and Organizational Analysis, London: Heinemann.

Camerer, C. (1985) “Redirecting Research in Business Policy and Strategy,” Strategic Management Journal, 6, 1-15.

Camerer, C. (1995) “Individual Decision Making,” in J.H. Kagel and A.E. Roth (eds), The Handbook of Experimental Economics, Princeton, NJ: Princeton University Press, 587-703.

Campbell, D.T. (1974) “Evolutionary Epistemology,” in P.A. Schilpp (ed.), The Philosophy of Karl Popper (Vol. 14, I. & II), The Library of Living Philosophers, La Salle, IL: Open Court. [Reprinted in G. Radnitzky and W.W. Bartley, III (eds), Evolutionary Epistemology, Rationality, and the Sociology of Knowledge, La Salle, IL: Open Court, 47-89.]

Campbell, D.T. (1988) “A General 'Selection Theory' as Implemented in Biological Evolution and in Social Belief-Transmission-with-Modification in Science,” Biology and Philosophy, 3, 171-7.

Campbell, D.T. (1995) “The Postpositivist, Non-Foundational, Hermeneutic Epistemology Exemplified in the Works of Donald W. Fiske,” in P.E. Shrout and S.T. Fiske (eds), Personality Research, Methods and Theory: A Festschrift Honoring Donald W. Fiske, Hillsdale, NJ: Erlbaum, 13-27.

Carley, K.M. (1992) “Organizational Learning and Personnel Turnover,” Organization Science, 3, 20-46.

Carley, K.M. (1995) “Computational and Mathematical Organization Theory: Perspective and Directions,” Computational and Mathematical Organization Theory, 1, 39-56.

Carley, K.M. (1997) “Organizational Adaptation in Volatile Environments,” unpublished working paper, Department of Social and Decision Sciences, H.J. Heinz III School of Public Policy and Management, Carnegie Mellon University, Pittsburgh, PA.

Carley, K.M. and Lee, J. (1997) “Dynamic Organizations: Organizational Adaptation in a Changing Environment,” unpublished working paper, Department of Social and Decision Sciences, H.J. Heinz III School of Public Policy and Management, Carnegie Mellon University, Pittsburgh, PA.

Carley, K.M. and Newell, A. (1994) “The Nature of the Social Agent,” Journal of Mathematical Sociology, 19, 221-62.

Carley, K.M. and Prietula, M.J. (eds) (1994) Computational Organization Theory, Hillsdale, NJ: Erlbaum.

Carley, K.M. and Svoboda, D.M. (1996) “Modeling Organizational Adaptation as a Simulated Annealing Process,” Sociological Methods and Research, 25, 138-68.

Chia, R. (1996) Organizational Analysis as Deconstructive Practice, Berlin: Walter de Gruyter.

Clegg, S.R., Hardy, C. and Nord, W.R. (eds) (1996) Handbook of Organization Studies, Thousand Oaks, CA: Sage.

Cohen, J. and Stewart, I. (1994) The Collapse of Chaos: Discovering Simplicity in a Complex World, New York: Viking.

Cohen, M.D., March, J.G. and Olsen, J.P. (1972) “A Garbage Can Model of Organizational Choice,” Administrative Science Quarterly, 17, 1-25.

Cowan, G.A., Pines, D. and Meltzer, D. (eds) (1994) Complexity: Metaphors, Models, and Reality, Proceedings Vol. XIX, Reading, MA: Addison-Wesley.

Cramer, F. (1993) Chaos and Order: The Complex Structure of Living Things (trans. D.L. Loewus), New York: VCH.

De Regt, C.D.G. (1994) Representing the World by Scientific Theories: The Case for Scientific Realism, Tilburg: Tilburg University Press.

Devitt, M. (1984) Realism and Truth, Oxford: Oxford University Press.

Donaldson, L. (1996) For Positivist Organization Theory, Thousand Oaks, CA: Sage.

Epstein, J.M. and Axtell, R. (1996) Growing Artificial Societies: Social Science from the Bottom Up, Cambridge, MA: MIT Press.

Favre, A., Guitton, H., Guitton, J., Lichnerowicz, A. and Wolff, E. (1995) Chaos and Determinism (trans. B.E. Schwarzbach), Baltimore: Johns Hopkins University Press.

Fischer, K.H. and Hertz, J.A. (1993) Spin Glasses, New York: Cambridge University Press.

Freeman, J.A. and Skapura, D.M. (1992) Neural Networks: Algorithms, Applications, and Programming Techniques, Reading, MA: Addison-Wesley.

Garzon, M. (1995) Models of Massive Parallelism, Berlin: Springer-Verlag.

Goldberg, D.E. (1989) Genetic Algorithms in Search, Optimization and Machine Learning, Reading, MA: Addison-Wesley.

Gumerman, G.J. and Gell-Mann, M. (eds) (1994) Understanding Complexity in the Prehistoric Southwest, Proceedings Vol. XVI, Reading, MA: Addison-Wesley.

Hahlweg, K. and Hooker, C.A. (eds) (1989) Issues in Evolutionary Epistemology, New York: State University of New York Press.

Hannan, M.T. and Carroll, G.R. (1992) Dynamics of Organizational Populations, New York: Oxford University Press.

Hannan, M.T. and Freeman, J. (1989) Organizational Ecology, Cambridge, MA: Harvard University Press.

Hausman, D.M. (1992) Essays on Philosophy and Economic Methodology, New York: Cambridge University Press.

Hogarth, R.M. and Reder, M.W. (eds) (1987) Rational Choice: the Contrast Between Economics and Psychology, Chicago, IL: University of Chicago Press.

Holland, J. (1975) Adaptation in Natural and Artificial Systems, Ann Arbor, MI: University of Michigan Press.

Holland, J.H. (1995) Hidden Order, Reading, MA: Addison-Wesley.

Homans, G.C. (1950) The Human Group, New York: Harcourt.

Hunt, S.D. (1991) Modern Marketing Theory: Critical Issues in the Philosophy of Marketing Science, Cincinnati, OH: South-Western.

Kauffman, S.A. (1993) The Origins of Order: Self-Organization and Selection in Evolution, New York: Oxford University Press.

Kaye, B. (1993) Chaos and Complexity, New York: VCH.

Levinthal, D.A. (1997a) “Adaptation on Rugged Landscapes,” Management Science, 43, 934-50.

Levinthal, D.A. (1997b) “The Slow Pace of Rapid Technological Change: Gradualism and Punctuation in Technological Change,” unpublished manuscript, The Wharton School, University of Pennsylvania, Philadelphia, PA.

Levinthal, D.A. and Warglien, M. (1997) “Landscape Design: Designing for Local Action in Complex Worlds,” unpublished manuscript, The Wharton School, University of Pennsylvania, Philadelphia, PA.

Lloyd, E.A. (1988) The Structure and Confirmation of Evolutionary Theory, Princeton, NJ: Princeton University Press.

Mackenzie, K.D. (1986) Organizational Design: the Organizational Audit and Analysis Technology, Norwood, NJ: Ablex.

Mainzer, K. (1994) Thinking in Complexity: The Complex Dynamics of Matter, Mind, and Mankind, New York: Springer-Verlag.

March, J.G. (1991) “Exploration and Exploitation in Organizational Learning,” Organization Science, 2, 71-87.

Masuch, M. and Warglien, M. (1992) Artificial Intelligence in Organization and Management Theory, Amsterdam: Elsevier Science.

McKelvey, B. (1997) “Quasi-natural Organization Science,” Organization Science, 8, 351-80.

McKelvey, B. (1999a) “Avoiding Complexity Catastrophe in Coevolutionary Pockets: Strategies for Rugged Landscapes,” Organization Science (special issue on Complexity Theory).

McKelvey, B. (1999b) “Self-Organization, Complexity Catastrophes, and Microstate Models at the Edge of Chaos,” in J.A.C. Baum and B. McKelvey (eds), Variations in Organization Science: in Honor of Donald T. Campbell, Thousand Oaks, CA: Sage.

McKelvey, B. (1999c) “Toward a Campbellian Realist Organization Science,” in J.A.C. Baum and B. McKelvey (eds), Variations in Organization Science: in Honor of Donald T. Campbell, Thousand Oaks, CA: Sage.

Merriam Webster's Collegiate Dictionary (1996) (10th edn), Springfield, MA: Merriam-Webster.

Mézard, M., Parisi, G. and Virasoro, M.A. (1987) Spin Glass Theory and Beyond, Singapore: World Scientific.

Micklethwait, J. and Wooldridge, A. (1996) The Witchdoctors: Making Sense of the Management Gurus, New York: Times Books.

Mitchell, M. (1996) An Introduction to Genetic Algorithms, Cambridge, MA: MIT Press.

Montgomery, C.A., Wernerfelt, B. and Balakrishnan, S. (1989) “Strategy Content and the Research Process: A Critique and Commentary,” Strategic Management Journal, 10, 189-97.

Müller, B. and Reinhardt, J. (1990) Neural Networks, New York: Springer-Verlag.

Nicolis, G. and Prigogine, I. (1989) Exploring Complexity: an Introduction, New York: Freeman.

Nola, R. (1988) Relativism and Realism in Science, Dordrecht: Kluwer.

Parsons, T. (1960) Structure and Process in Modern Societies, Glencoe, IL: Free Press.

Peters, R.H. (1991) A Critique for Ecology, Cambridge: Cambridge University Press.

Pfeffer, J. (1982) Organizations and Organization Theory, Boston, MA: Pitman.

Pfeffer, J. (1993) “Barriers to the Advancement of Organizational Science: Paradigm Development as a Dependent Variable,” Academy of Management Review, 18, 599-620.

Pfeffer, J. (1997) New Directions for Organization Theory, New York: Oxford University Press.

Popper, K.R. (1982) Realism and the Aim of Science [From the Postscript to the Logic of Scientific Discovery], W.W. Bartley III (ed.), Totowa, NJ: Rowman and Littlefield.

Porter, M.E. (1985) Competitive Advantage, New York: Free Press.

Prahalad, C.K. and Hamel, G. (1990) “The Core Competence of the Corporation,” Harvard Business Review, 68, 78-91.

Prigogine, I. and Stengers, I. (1984) Order out of Chaos: Man's New Dialogue with Nature, New York: Bantam.

Redman, D.A. (1991) Economics and the Philosophy of Science, New York: Oxford University Press.

Reed, M. and Hughes, M. (eds) (1992) Rethinking Organization: New Directions in Organization Theory and Analysis, London: Sage.

Rescher, N. (1970) Scientific Explanation, New York: Collier-Macmillan.

Rescher, N. (1987) Scientific Realism: a Critical Reappraisal, Dordrecht: Reidel.

Rivkin, J. (1997) “Imitation of Complex Strategies,” presented at the Academy of Management Meeting, Boston, MA.

Rivkin, J. (1998) “Optimally Suboptimal Organizations: Local Search on Complex Landscapes,” presented at Academy of Management Meeting, San Diego, CA.

Rosenberg, A. (1985) The Structure of Biological Science, Cambridge: Cambridge University Press.

Sanchez, R. (1993) “Strategic Flexibility, Firm Organization, and Managerial Work in Dynamic Markets: a Strategic Options Perspective,” Advances in Strategic Management, 9, 251-91.

Schrödinger, E. (1944) What is Life: the Physical Aspect of the Living Cell, Cambridge: Cambridge University Press.

Scott, W.R. (1995) Institutions and Organizations, Thousand Oaks, CA: Sage.

Scott, W.R. (1998) Organizations: Rational, Natural, and Open Systems (4th edn), Englewood Cliffs, NJ: Prentice-Hall.

Silverman, D. (1971) The Theory of Organisations, New York: Basic Books.

Sorenson, O. (1997) “The Complexity Catastrophe in the Evolution in the Computer Industry: Interdependence and Adaptability in Organizational Evolution,” unpublished PhD dissertation, Sociology Department, Stanford University, Stanford, CA.

Staw, B.M. (ed.) (1991) Psychological Dimensions of Organizational Behavior, Englewood Cliffs, NJ: Prentice-Hall.

Suppe, F. (1967) “The Meaning and Use of Models in Mathematics and the Exact Sciences,” unpublished PhD dissertation, University of Michigan, Ann Arbor.

Suppe, F. (1977) The Structure of Scientific Theories (2nd edn), Chicago: University of Chicago Press.

Suppe, F. (1989) The Semantic Conception of Theories and Scientific Realism, Urbana-Champaign, IL: University of Illinois Press.

Suppes, P. (1961) “A Comparison of the Meaning and Use of Models in Mathematics and the Empirical Sciences,” in H. Freudenthal (ed.), The Concept and the Role of the Model in Mathematics and Natural and Social Sciences, Dordrecht: Reidel, 163-77.

Suppes, P. (1962) “Models of Data,” in E. Nagel, P. Suppes, and A. Tarski (eds), Logic, Methodology, and Philosophy of Science: Proceedings of the 1960 International Congress, Stanford, CA: Stanford University Press, 252-61.

Suppes, P. (1967) “What is Scientific Theory?” in S. Morgenbesser (ed.), Philosophy of Science Today, New York: Meridian, 55-67.

Sutton, R.I. and Staw, B.M. (1995) “What Theory Is Not,” Administrative Science Quarterly, 40, 371-84.

Teece, D.J., Pisano, G. and Shuen, A. (1994) “Dynamic Capabilities and Strategic Management,” CCC working paper #94-9, Center for Research in Management, University of California Berkeley.

Thompson, P. (1989) The Structure of Biological Theories, Albany, NY: State University of New York Press.

Toffoli, T. and Margolus, N. (1987) Cellular Automata Machines, Cambridge, MA: MIT Press.

Van de Ven, A.H. (1992) “Suggestions for Studying Strategy Process: a Research Note,” Strategic Management Journal, 13, 169-88.

van Fraassen, B.C. (1970) “On the Extension of Beth's Semantics of Physical Theories,” Philosophy of Science, 37, 325-39.

van Fraassen, B.C. (1980) The Scientific Image, Oxford: Clarendon.

Warglien, M. and Masuch, M. (eds) (1995) The Logic of Organizational Disorder, Berlin: Walter de Gruyter.

Wasserman, P.D. (1989) Neural Computing: Theory and Practice, New York: Van Nostrand Reinhold.

Wasserman, P.D. (1993) Advanced Methods in Neural Computing, New York: Van Nostrand Reinhold.

Weick, K.E. (1995) Sensemaking in Organizations, Thousand Oaks, CA: Sage.

Weisbuch, G. (1993) Complex Systems Dynamics: an Introduction to Automata Networks (trans. S. Ryckebusch), Lecture Notes Vol. II, Santa Fe Institute, Reading, MA: Addison-Wesley.

Wernerfelt, B. (1984) “A Resource-Based View of the Firm,” Strategic Management Journal, 5, 171-80.

Williams, M.B. (1970) “Deducing the Consequences of Evolution: a Mathematical Model,” Journal of Theoretical Biology, 29, 343-85.

Zucker, L.G. (1988) Institutional Patterns and Organizations: Culture and Environment, Cambridge, MA: Ballinger.